Introduction

A standard problem in computer vision is deriving the minimum bounding box (MBB) for a user, which is often the first step in a computer vision application. An MBB can be defined as the smallest rectangle that completely encloses a set of points. With the Kinect SDK, identifying an MBB for a user becomes straightforward: it is derived by processing the depth frame data returned from the sensor.
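To make the definition concrete, here is a minimal sketch of an axis-aligned MBB over a set of integer points. The `BoundingBoxSketch` class and its `Enclose` method are hypothetical names used only for illustration; they are not part of the Kinect SDK or of the application described in this post. Width and height are taken as the difference between the extreme coordinates, matching the convention used later in the post.

```csharp
using System;
using System.Collections.Generic;

public static class BoundingBoxSketch
{
    // Returns the smallest axis-aligned rectangle enclosing every point,
    // or null when the sequence contains no points.
    public static (int X, int Y, int Width, int Height)? Enclose(
        IEnumerable<(int X, int Y)> points)
    {
        int minX = int.MaxValue, minY = int.MaxValue;
        int maxX = int.MinValue, maxY = int.MinValue;
        bool any = false;

        foreach (var p in points)
        {
            any = true;
            minX = Math.Min(minX, p.X);
            minY = Math.Min(minY, p.Y);
            maxX = Math.Max(maxX, p.X);
            maxY = Math.Max(maxY, p.Y);
        }

        if (!any)
        {
            return null;
        }

        return (minX, minY, maxX - minX, maxY - minY);
    }
}
```

For the points (3, 4), (10, 2) and (7, 9), this returns X = 3, Y = 2, Width = 7 and Height = 7.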

Implementation

The XAML for the UI of the application is shown below. The Viewbox in the first column is responsible for displaying the video stream and overlaying it with the MBB. The MBB is a Rectangle that binds to a number of properties to control its location and size. It uses a converter (not shown) to control when the MBB is visible.

    <Window x:Class="KinectDemo.MainWindow"
            xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
            xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
            xmlns:conv="clr-namespace:KinectDemo.Converters"
            Title="Minimum Bounding Box" ResizeMode="NoResize" SizeToContent="WidthAndHeight"
            Loaded="Window_Loaded" Closed="Window_Closed">
        <Grid>
            <Grid.Resources>
                <conv:BooleanToVisibilityConverter x:Key="boolVis" />
            </Grid.Resources>
            <Grid.ColumnDefinitions>
                <ColumnDefinition Width="Auto" />
                <ColumnDefinition Width="200" />
            </Grid.ColumnDefinitions>
            <StackPanel Grid.Column="0">
                <TextBlock Margin="0,10,0,10"
                           HorizontalAlignment="Center"
                           Text="Video Stream" />
                <Viewbox Margin="10,0,10,10">
                    <Grid Height="240"
                          Width="320">
                        <Image Source="{Binding ColourBitmap}" />
                        <Rectangle Height="{Binding Box.Height}"
                                   HorizontalAlignment="Left"
                                   RadiusX="5"
                                   RadiusY="5"
                                   Stroke="Red"
                                   StrokeThickness="2"
                                   VerticalAlignment="Top"
                                   Visibility="{Binding Path=IsUserDetected, Converter={StaticResource boolVis}}"
                                   Width="{Binding Box.Width}">
                            <Rectangle.RenderTransform>
                                <TranslateTransform X="{Binding Box.X}"
                                                    Y="{Binding Box.Y}" />
                            </Rectangle.RenderTransform>
                        </Rectangle>
                    </Grid>
                </Viewbox>
            </StackPanel>
            <StackPanel Grid.Column="1">
                <GroupBox Header="Motor Control"
                          Height="100"
                          VerticalAlignment="Top"
                          Width="190">
                    <StackPanel HorizontalAlignment="Center"
                                Margin="10"
                                Orientation="Horizontal">
                        <Button x:Name="motorUp"
                                Click="motorUp_Click"
                                Content="Up"
                                Height="30"
                                Width="70" />
                        <Button x:Name="motorDown"
                                Click="motorDown_Click"
                                Content="Down"
                                Height="30"
                                Margin="10,0,0,0"
                                Width="70" />
                    </StackPanel>
                </GroupBox>
                <GroupBox Header="Information"
                          Height="100"
                          VerticalAlignment="Top"
                          Width="190">
                    <StackPanel Orientation="Horizontal" Margin="10">
                        <TextBlock Text="Frame rate: " />
                        <TextBlock Text="{Binding FramesPerSecond}"
                                   VerticalAlignment="Top"
                                   Width="50" />
                    </StackPanel>
                </GroupBox>
            </StackPanel>
        </Grid>
    </Window>

The constructor initializes the StreamManager class (contained in my KinectManager library), which handles the stream processing. The DataContext of MainWindow is set to kinectStream for binding purposes, and the UseBoundingBox property of the StreamManager class is set to true so that bounding box processing occurs.

    public MainWindow()
    {
        InitializeComponent();
        this.kinectStream = new StreamManager();
        this.DataContext = this.kinectStream;
        this.kinectStream.UseBoundingBox = true;
    }

The Window_Loaded event handler initializes the three required subsystems of the Kinect pipeline (depth with player index, skeletal tracking, and colour video), opens the depth and video streams, and sets the smoothing and filtering parameters that will be applied to the skeleton tracking data returned from the sensor. Finally, it registers event handlers for when a depth frame is available and when a video frame is available. Each event handler simply invokes a method in the StreamManager class to process the received data.

    private void Window_Loaded(object sender, RoutedEventArgs e)
    {
        this.runtime = new Runtime();

        try
        {
            this.runtime.Initialize(
                RuntimeOptions.UseDepthAndPlayerIndex |
                RuntimeOptions.UseSkeletalTracking |
                RuntimeOptions.UseColor);
            this.cam = runtime.NuiCamera;
        }
        catch (InvalidOperationException)
        {
            MessageBox.Show("Runtime initialization failed. Ensure Kinect is plugged in");
            return;
        }

        try
        {
            this.runtime.DepthStream.Open(ImageStreamType.Depth, 2,
                ImageResolution.Resolution320x240, ImageType.DepthAndPlayerIndex);
            this.runtime.VideoStream.Open(ImageStreamType.Video, 2,
                ImageResolution.Resolution640x480, ImageType.Color);
        }
        catch (InvalidOperationException)
        {
            MessageBox.Show("Failed to open stream. Specify a supported image type/resolution.");
            return;
        }

        this.kinectStream.LastTime = DateTime.Now;

        this.runtime.SkeletonEngine.TransformSmooth = true;
        var parameters = new TransformSmoothParameters
        {
            Smoothing = 0.75f,
            Correction = 0.0f,
            Prediction = 0.0f,
            JitterRadius = 0.05f,
            MaxDeviationRadius = 0.04f
        };
        this.runtime.SkeletonEngine.SmoothParameters = parameters;

        this.runtime.DepthFrameReady +=
            new EventHandler<ImageFrameReadyEventArgs>(runtime_DepthFrameReady);
        this.runtime.VideoFrameReady +=
            new EventHandler<ImageFrameReadyEventArgs>(runtime_VideoFrameReady);
    }

Three important properties from the StreamManager class are shown below. UseBoundingBox is set to true if the MBB should be derived when processing depth frame data. Box is an instance of my MinimumBoundingBox type (not shown here). While Int32Rect seems like a natural type for representing an MBB, it has no built-in property change notification, which is why I created the MinimumBoundingBox type. IsUserDetected is set to true by ConvertDepthFrame if a user is detected in the depth frame data. The Visibility property of the Rectangle in the UI binds to it, so the MBB is only visible when a user is detected.

    public bool UseBoundingBox { get; set; }
    public MinimumBoundingBox Box { get; private set; }
    public bool IsUserDetected { get; private set; }
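Since the MinimumBoundingBox type is not shown, the following is only a guess at what such a type might look like: a minimal sketch of a box whose properties raise change notifications so that WPF bindings update when the values change. The class name `MinimumBoundingBoxSketch` and the private `Set` helper are hypothetical; only `INotifyPropertyChanged` and its supporting types come from the .NET framework.

```csharp
using System.ComponentModel;

// Hypothetical stand-in for the MinimumBoundingBox type, which the post does not show.
public class MinimumBoundingBoxSketch : INotifyPropertyChanged
{
    private int x;
    private int y;
    private int width;
    private int height;

    public event PropertyChangedEventHandler PropertyChanged;

    public int X
    {
        get { return this.x; }
        set { this.Set(ref this.x, value, "X"); }
    }

    public int Y
    {
        get { return this.y; }
        set { this.Set(ref this.y, value, "Y"); }
    }

    public int Width
    {
        get { return this.width; }
        set { this.Set(ref this.width, value, "Width"); }
    }

    public int Height
    {
        get { return this.height; }
        set { this.Set(ref this.height, value, "Height"); }
    }

    // Stores the new value and notifies listeners, but only when the value changed.
    private void Set(ref int field, int value, string propertyName)
    {
        if (field == value)
        {
            return;
        }

        field = value;
        PropertyChangedEventHandler handler = this.PropertyChanged;
        if (handler != null)
        {
            handler(this, new PropertyChangedEventArgs(propertyName));
        }
    }
}
```

Skipping the notification when the value is unchanged avoids needless binding updates on frames where the user has not moved.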

GetDepthStream is a method in the StreamManager class. This method takes the depth data, and converts it by invoking the ConvertDepthFrame method.

    public void GetDepthStream(ImageFrameReadyEventArgs e)
    {
        if (depthFrame32 == null)
        {
            depthFrame32 = new byte[e.ImageFrame.Image.Width *
                e.ImageFrame.Image.Height * 4];
        }

        PlanarImage image = e.ImageFrame.Image;
        byte[] depthFrame = ConvertDepthFrame(image.Bits);

        this.DepthBitmap = BitmapSource.Create(image.Width, image.Height,
            96, 96, PixelFormats.Bgr32, null, depthFrame, image.Width * 4);
        this.OnPropertyChanged("DepthBitmap");
    }

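ConvertDepthFrame converts a 16-bit greyscale depth frame into a 32-bit frame. It does this by transforming the 13-bit depth information in each pixel into an 8-bit intensity value. The remaining 3 bits of each pixel hold the player index, which is non-zero when the pixel belongs to a user. If the UseBoundingBox property is set to true, the method also derives the MBB from the pixels that belong to a user and sets the Box properties to the derived values.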

    private byte[] ConvertDepthFrame(byte[] depthFrame16)
    {
        int topLeftX = int.MaxValue;
        int topLeftY = int.MaxValue;
        int bottomRightX = int.MinValue;
        int bottomRightY = int.MinValue;
        bool isUserDetected = false;

        // Each depth pixel is 2 bytes; each output pixel is 4 bytes.
        for (int i16 = 0, i32 = 0;
             i16 < depthFrame16.Length && i32 < depthFrame32.Length;
             i16 += 2, i32 += 4)
        {
            // Low 3 bits: player index. Upper 13 bits: depth.
            int user = depthFrame16[i16] & 0x07;
            int realDepth = (depthFrame16[i16 + 1] << 5) | (depthFrame16[i16] >> 3);
            byte intensity = (byte)(255 - (255 * realDepth / 0x0fff));

            if (this.UseBoundingBox)
            {
                if (user != 0)
                {
                    // Assumes this.DepthBitmap has already been created;
                    // its PixelWidth is used as the frame width.
                    int y = (i32 / 4) / this.DepthBitmap.PixelWidth;
                    int x = (i32 / 4) - this.DepthBitmap.PixelWidth * y;

                    if (x < topLeftX)
                        topLeftX = x;
                    if (x > bottomRightX)
                        bottomRightX = x;
                    if (y < topLeftY)
                        topLeftY = y;
                    if (y > bottomRightY)
                        bottomRightY = y;

                    isUserDetected = true;
                }
            }

            depthFrame32[i32 + RED_IDX] = (byte)(intensity / 2);
            depthFrame32[i32 + GREEN_IDX] = (byte)(intensity / 2);
            depthFrame32[i32 + BLUE_IDX] = (byte)(intensity / 2);
        }

        if (this.UseBoundingBox)
        {
            // Only update the box when at least one user pixel was found.
            if (topLeftX != int.MaxValue)
            {
                this.Box.X = topLeftX;
            }
            if (bottomRightX != int.MinValue)
            {
                this.Box.Width = bottomRightX - topLeftX;
            }
            if (topLeftY != int.MaxValue)
            {
                this.Box.Y = topLeftY;
            }
            if (bottomRightY != int.MinValue)
            {
                this.Box.Height = bottomRightY - topLeftY;
            }
            if (isUserDetected != IsUserDetected)
            {
                this.IsUserDetected = isUserDetected;
                this.OnPropertyChanged("IsUserDetected");
            }
        }

        return this.depthFrame32;
    }
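The bit manipulation and index arithmetic inside the loop can be checked in isolation. The sketch below repeats the same two calculations on plain values, without the Kinect SDK. The `DepthPixelSketch` class and its method names are hypothetical and exist only for this illustration; the pixel layout (player index in the low 3 bits, depth in the upper 13 bits, low byte first) is taken from the code above.

```csharp
using System;

public static class DepthPixelSketch
{
    // Splits one 16-bit depth-and-player-index pixel, given as its
    // low and high bytes, into the player index and the depth value.
    public static (int Player, int Depth) Unpack(byte low, byte high)
    {
        int player = low & 0x07;               // low 3 bits
        int depth = (high << 5) | (low >> 3);  // upper 13 bits
        return (player, depth);
    }

    // Maps a pixel's position in the flat frame array to its
    // column (x) and row (y) for a frame of the given width.
    public static (int X, int Y) ToCoordinates(int pixelIndex, int frameWidth)
    {
        int y = pixelIndex / frameWidth;
        int x = pixelIndex - frameWidth * y;
        return (x, y);
    }
}
```

As a worked example, a depth value of 1000 for player 2 is stored as (1000 << 3) | 2 = 0x1F42, so the low byte is 0x42 and the high byte is 0x1F; `Unpack(0x42, 0x1F)` gives back player 2 and depth 1000. In a frame 320 pixels wide, pixel 645 lies at x = 5, y = 2.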

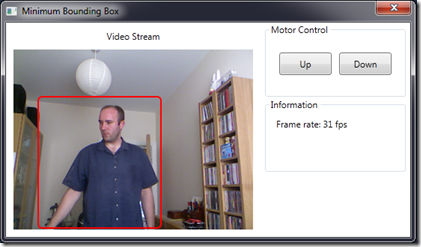

The screenshot shows the running application. Once a user is identified from the depth stream returned from the Kinect sensor, the MBB is derived and the Rectangle representing the MBB is made visible over the video stream. As the user moves, the MBB changes its size and location.

Conclusion

The Kinect for Windows SDK beta from Microsoft Research is a starter kit for application developers. It allows access to the Kinect sensor and experimentation with its features. The sensor provides depth data to the application, which can be used to accurately derive a minimum bounding box for a user. Deriving a minimum bounding box for a user is the first step in developing a security application that is capable of identifying users based upon their skeletal data.

2 comments:

"KinectDemo.Converters" can't find...

I didn't show it in the post because it is standard code. The KinectDemo.Converters namespace contains a class called BooleanToVisibilityConverter, which takes a boolean value and returns Visibility.Visible if it is true, otherwise Visibility.Collapsed. The code is shown below:

    using System;
    using System.Globalization;
    using System.Windows;
    using System.Windows.Data;

    namespace KinectDemo.Converters
    {
        [ValueConversion(typeof(bool), typeof(Visibility))]
        public class BooleanToVisibilityConverter : IValueConverter
        {
            public BooleanToVisibilityConverter()
            {
            }

            public object Convert(object value, Type targetType, object parameter, CultureInfo culture)
            {
                bool flag = false;
                if (value is bool)
                {
                    flag = (bool)value;
                }
                else if (value is bool?)
                {
                    bool? nullable = (bool?)value;
                    flag = nullable.HasValue ? nullable.Value : false;
                }
                return flag ? Visibility.Visible : Visibility.Collapsed;
            }

            public object ConvertBack(object value, Type targetType, object parameter, CultureInfo culture)
            {
                throw new NotImplementedException();
            }
        }
    }
