Introduction

Previous blog posts have looked at a number of topics including simple gesture recognition, skeleton tracking, pose recognition, and smoothing skeleton data. It’s now time to link these topics together to produce a robust and extensible gesture recognizer that can be used in different NUI (natural user interface) applications.

My high-level approach to the gesture recognition process will be as follows:

- Detect whether the user is moving or stationary.

- Detect the start of a gesture (a posture).

- Capture the gesture.

- Detect the end of a gesture (a posture).

- Identify the gesture.

A gesture can be thought of as a sequence of points. The coordinates of these points depend on the user's distance from the sensor, yet a gesture recognizer has to recognize a gesture regardless of that distance. It will therefore be necessary to scale gestures to a common reference, after which gestures captured at any distance from the sensor can be identified robustly.
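The post does not say which normalization it will use, so the following is only a minimal sketch of one common option, under stated assumptions: translate the gesture so the minimum corner of its bounding box sits at the origin (removing where the user was standing), then divide by the largest side of the box so that gestures of different sizes fit the same unit cube. `Vector` is a stand-in for the SDK's `Microsoft.Research.Kinect.Nui.Vector`, and `GestureScaler.Normalize` is a hypothetical helper.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Stand-in for Microsoft.Research.Kinect.Nui.Vector so the sketch compiles on its own.
public struct Vector
{
    public float X, Y, Z, W;
}

public static class GestureScaler
{
    // Hypothetical helper: maps a gesture into the unit cube [0, 1]^3 by
    // translating its bounding-box minimum to the origin and dividing every
    // coordinate by the largest side of the box (aspect ratio is preserved).
    public static List<Vector> Normalize(IReadOnlyList<Vector> points)
    {
        if (points.Count == 0)
        {
            return new List<Vector>();
        }

        float minX = points.Min(p => p.X), maxX = points.Max(p => p.X);
        float minY = points.Min(p => p.Y), maxY = points.Max(p => p.Y);
        float minZ = points.Min(p => p.Z), maxZ = points.Max(p => p.Z);

        float extent = Math.Max(maxX - minX, Math.Max(maxY - minY, maxZ - minZ));
        // A gesture made of one repeated point has zero extent; avoid dividing by zero.
        float scale = extent > 0f ? 1f / extent : 1f;

        return points
            .Select(p => new Vector
            {
                X = (p.X - minX) * scale,
                Y = (p.Y - minY) * scale,
                Z = (p.Z - minZ) * scale,
                W = p.W
            })
            .ToList();
    }
}
```

With this scheme, the same shape drawn twice as large and in a different place normalizes to the same points, which is what makes later comparison against stored gestures possible.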

There are many algorithmic approaches to gesture recognition, and I will write more about them in a future blog post. The focus of this post is on detecting whether the user is moving or stationary, which can be done by examining the user's center of mass over time.

The center of mass, also known as the barycenter, is the mean location of all the mass in a system. The term barycenter is most commonly used in astrophysics, where it is the center of mass around which two or more celestial bodies orbit each other. The barycenter of a shape is the intersection of all straight lines that divide the shape into two parts of equal moment about the line, so it can be thought of as the mean of all points of the shape.
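For a shape represented as a finite set of equally weighted points, the barycenter is just the component-wise mean of the points. The minimal sketch below illustrates this; `Vector` is a stand-in for the SDK's vector type and `Geometry.Barycenter` is a hypothetical helper, not part of the application described in this post.

```csharp
using System;
using System.Collections.Generic;

// Stand-in for Microsoft.Research.Kinect.Nui.Vector so the sketch compiles on its own.
public struct Vector
{
    public float X, Y, Z, W;
}

public static class Geometry
{
    // Hypothetical helper: the barycenter of equally weighted points is the
    // mean of their X, Y and Z coordinates. W is left at its default of 0.
    public static Vector Barycenter(IReadOnlyCollection<Vector> points)
    {
        if (points.Count == 0)
        {
            throw new ArgumentException("At least one point is required.", nameof(points));
        }

        float sumX = 0f, sumY = 0f, sumZ = 0f;
        foreach (Vector p in points)
        {
            sumX += p.X;
            sumY += p.Y;
            sumZ += p.Z;
        }

        return new Vector
        {
            X = sumX / points.Count,
            Y = sumY / points.Count,
            Z = sumZ / points.Count
        };
    }
}
```

For example, the four corners of a 2 × 2 square in the XY plane have their barycenter at its middle, (1, 1, 0).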

The application documented here uses a barycenter to determine whether the user is stationary (stable) or moving (not stable).

Implementation

The XAML for the UI is shown below. An Image shows the video stream from the sensor, and a Canvas overlays the user's skeleton on the video stream. A Slider now controls the elevation of the sensor through bindings to a Camera class, which removes some of the references to the UI from the code-behind file. TextBlocks bound to FramesPerSecond, IsSkeletonTracking and IsStable show the frame rate, whether the skeleton is being tracked, and whether it is stable.

```xml
<Window x:Class="KinectDemo.MainWindow"
        xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
        xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
        xmlns:conv="clr-namespace:KinectDemo.Converters"
        Title="Gesture Recognition" ResizeMode="NoResize" SizeToContent="WidthAndHeight"
        Loaded="Window_Loaded" Closed="Window_Closed">
    <Grid>
        <Grid.ColumnDefinitions>
            <ColumnDefinition Width="Auto" />
            <ColumnDefinition Width="300" />
        </Grid.ColumnDefinitions>
        <StackPanel Grid.Column="0">
            <TextBlock HorizontalAlignment="Center" Text="Tracking..." />
            <Viewbox>
                <Grid ClipToBounds="True">
                    <Image Height="300" Margin="10,0,10,10" Source="{Binding ColourBitmap}" Width="400" />
                    <Canvas x:Name="skeletonCanvas" />
                </Grid>
            </Viewbox>
        </StackPanel>
        <StackPanel Grid.Column="1">
            <GroupBox Header="Motor Control" Height="100" VerticalAlignment="Top" Width="290">
                <Slider x:Name="elevation"
                        AutoToolTipPlacement="BottomRight"
                        IsSnapToTickEnabled="True"
                        LargeChange="10"
                        Maximum="{Binding ElevationMaximum}"
                        Minimum="{Binding ElevationMinimum}"
                        HorizontalAlignment="Center"
                        Orientation="Vertical"
                        SmallChange="3"
                        TickFrequency="3"
                        Value="{Binding Path=ElevationAngle, Mode=TwoWay}" />
            </GroupBox>
            <GroupBox Header="Information" Height="200" VerticalAlignment="Top" Width="290">
                <GroupBox.Resources>
                    <conv:BooleanToStringConverter x:Key="boolStr" />
                </GroupBox.Resources>
                <StackPanel>
                    <StackPanel Orientation="Horizontal" Margin="10">
                        <TextBlock Text="Frame rate: " />
                        <TextBlock Text="{Binding FramesPerSecond}" VerticalAlignment="Top" Width="50" />
                    </StackPanel>
                    <StackPanel Margin="10,0,0,0" Orientation="Horizontal">
                        <TextBlock Text="Tracking skeleton: " />
                        <TextBlock Text="{Binding IsSkeletonTracking, Converter={StaticResource boolStr}}"
                                   VerticalAlignment="Top" Width="30" />
                    </StackPanel>
                    <StackPanel Orientation="Horizontal" Margin="10">
                        <TextBlock Text="Stable: " />
                        <TextBlock Text="{Binding Path=IsStable, Converter={StaticResource boolStr}}"
                                   VerticalAlignment="Top" Width="30" />
                    </StackPanel>
                </StackPanel>
            </GroupBox>
        </StackPanel>
    </Grid>
</Window>
```

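The StreamManager class (in my KinectManager library) contains the IsSkeletonTracking and IsStable properties and their backing fields, along with an instance of the BaryCenter class. When IsSkeletonTracking is set to false, IsStable is reset to null, since stability is undefined without a tracked skeleton.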

```csharp
private bool? isStable = null;
private bool isSkeletonTracking;
private readonly BaryCenter baryCenter = new BaryCenter();

public bool IsSkeletonTracking
{
    get { return this.isSkeletonTracking; }
    private set
    {
        this.isSkeletonTracking = value;
        this.OnPropertyChanged("IsSkeletonTracking");

        // Without a tracked skeleton, stability is unknown rather than false.
        if (this.isSkeletonTracking == false)
        {
            this.IsStable = null;
        }
    }
}

public bool? IsStable
{
    get { return this.isStable; }
    private set
    {
        this.isStable = value;
        this.OnPropertyChanged("IsStable");
    }
}
```
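The property setters above call OnPropertyChanged, which the post does not show. A common way to provide it, and an assumption here, is to implement INotifyPropertyChanged from System.ComponentModel so that WPF bindings such as `{Binding Path=IsStable}` refresh when a value changes. The sketch below uses a hypothetical, cut-down `TrackingStatus` class containing only the two bound properties; its setters are public purely so the behavior can be exercised.

```csharp
using System.ComponentModel;

// Hypothetical stand-in for StreamManager: only the two properties bound in
// the XAML, plus the change notification that WPF data binding listens for.
public class TrackingStatus : INotifyPropertyChanged
{
    private bool isSkeletonTracking;
    private bool? isStable;

    public event PropertyChangedEventHandler PropertyChanged;

    // Public setters for the sketch; the StreamManager setters are private.
    public bool IsSkeletonTracking
    {
        get { return this.isSkeletonTracking; }
        set
        {
            this.isSkeletonTracking = value;
            this.OnPropertyChanged("IsSkeletonTracking");
            if (!value)
            {
                // Losing the skeleton makes stability unknown.
                this.IsStable = null;
            }
        }
    }

    public bool? IsStable
    {
        get { return this.isStable; }
        set
        {
            this.isStable = value;
            this.OnPropertyChanged("IsStable");
        }
    }

    // Raises PropertyChanged so that bound controls re-read the property.
    protected virtual void OnPropertyChanged(string propertyName)
    {
        PropertyChangedEventHandler handler = this.PropertyChanged;
        if (handler != null)
        {
            handler(this, new PropertyChangedEventArgs(propertyName));
        }
    }
}
```

Setting IsSkeletonTracking to false therefore raises two notifications, one for IsSkeletonTracking and one for IsStable, so the "Stable" TextBlock updates as soon as tracking is lost.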

The SkeletonFrameReady event handler calls a method in the StreamManager class named GetSkeletonStream, which processes each frame of skeleton data as follows. e.SkeletonFrame.Skeletons is an array of SkeletonData structures, each holding the data for a single skeleton. If the TrackingState field of a SkeletonData structure indicates that the skeleton is being tracked, the IsSkeletonTracking property is set to true and the skeleton's position is added to a collection in the BaryCenter class. The IsStable method of the BaryCenter class is then invoked to determine whether the user is stable. Finally, the IsStable property is updated with the result.

```csharp
public void GetSkeletonStream(SkeletonFrameReadyEventArgs e)
{
    bool tracking = false;
    bool stable = false;

    foreach (var skeleton in e.SkeletonFrame.Skeletons)
    {
        if (skeleton.TrackingState == SkeletonTrackingState.Tracked)
        {
            tracking = true;
            this.baryCenter.Add(skeleton.Position, skeleton.TrackingID);
            stable = this.baryCenter.IsStable(skeleton.TrackingID);
        }
    }

    // Reset tracking when no skeleton is tracked; the setter then sets IsStable to null.
    this.IsSkeletonTracking = tracking;
    if (tracking)
    {
        this.IsStable = stable;
    }
}
```

The Vector type in the Microsoft.Research.Kinect.Nui namespace currently lacks many useful vector operations, including basic vector arithmetic. I therefore defined extension methods to calculate the length of a vector and to subtract one vector from another. The extension methods are currently defined in a static Helper class. Extension methods enable you to “add” methods to an existing type without creating a new derived type or otherwise modifying the original type, and calling an extension method looks no different from calling a method that is actually defined on the type.

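```csharp
using System;
using Microsoft.Research.Kinect.Nui;

public static class Helper
{
    // Euclidean length of the X, Y and Z components (W is ignored).
    public static float Length(this Vector vector)
    {
        return (float)Math.Sqrt(vector.X * vector.X +
                                vector.Y * vector.Y +
                                vector.Z * vector.Z);
    }

    // Component-wise difference: left - right.
    public static Vector Subtract(this Vector left, Vector right)
    {
        return new Vector
        {
            X = left.X - right.X,
            Y = left.Y - right.Y,
            Z = left.Z - right.Z,
            W = left.W - right.W
        };
    }
}
```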

The Add and IsStable methods of the BaryCenter class are shown below (the positions, windowSize and Threshold members they use are not shown). The Add method stores skeleton positions in a Dictionary that maps each tracking ID (an int) to a List of Vectors. Each list acts as a fixed-size sliding window: once it holds more than windowSize positions, the oldest one is removed. The IsStable method returns a boolean indicating whether the user is stable. If fewer than windowSize positions have been stored for the tracking ID, it returns false. Otherwise it computes the distance between each earlier position in the window and the latest position. If any of these distances exceeds Threshold, the user has moved too far during the window and the method returns false; otherwise it returns true.

```csharp
public void Add(Vector position, int trackingID)
{
    if (!this.positions.ContainsKey(trackingID))
    {
        this.positions.Add(trackingID, new List<Vector>());
    }

    this.positions[trackingID].Add(position);

    // Keep only the most recent windowSize positions (sliding window).
    if (this.positions[trackingID].Count > this.windowSize)
    {
        this.positions[trackingID].RemoveAt(0);
    }
}

public bool IsStable(int trackingID)
{
    List<Vector> currentPositions;
    if (!this.positions.TryGetValue(trackingID, out currentPositions) ||
        currentPositions.Count != this.windowSize)
    {
        // Not enough history yet to decide.
        return false;
    }

    Vector current = currentPositions[currentPositions.Count - 1];

    // Compare every earlier position in the window with the latest one.
    for (int i = 0; i < currentPositions.Count - 1; i++)
    {
        Vector result = currentPositions[i].Subtract(current);
        float length = result.Length();
        if (length > this.Threshold)
        {
            return false;
        }
    }

    return true;
}
```
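Because the positions, windowSize and Threshold members are not shown, here is a self-contained sketch of how the rest of such a class might look, so the sliding-window test can be run in isolation. The constructor and its default values (a 20-frame window and a 0.05 threshold, in the same units as the positions) are hypothetical and would need tuning on real data, and `Vector` is a stand-in for the SDK type.

```csharp
using System;
using System.Collections.Generic;

// Stand-in for Microsoft.Research.Kinect.Nui.Vector so the sketch compiles on its own.
public struct Vector
{
    public float X, Y, Z, W;
}

public static class Helper
{
    public static float Length(this Vector v) => (float)Math.Sqrt(v.X * v.X + v.Y * v.Y + v.Z * v.Z);

    public static Vector Subtract(this Vector l, Vector r) =>
        new Vector { X = l.X - r.X, Y = l.Y - r.Y, Z = l.Z - r.Z, W = l.W - r.W };
}

public class BaryCenter
{
    // One sliding window of recent positions per tracking ID.
    private readonly Dictionary<int, List<Vector>> positions = new Dictionary<int, List<Vector>>();
    private readonly int windowSize;

    // Hypothetical defaults; not taken from the original application.
    public BaryCenter(int windowSize = 20, float threshold = 0.05f)
    {
        if (windowSize < 2) throw new ArgumentOutOfRangeException(nameof(windowSize));
        this.windowSize = windowSize;
        this.Threshold = threshold;
    }

    // Maximum distance any earlier position may lie from the latest one.
    public float Threshold { get; set; }

    public void Add(Vector position, int trackingID)
    {
        if (!this.positions.TryGetValue(trackingID, out List<Vector> window))
        {
            window = new List<Vector>();
            this.positions.Add(trackingID, window);
        }

        window.Add(position);
        if (window.Count > this.windowSize)
        {
            window.RemoveAt(0); // drop the oldest position
        }
    }

    public bool IsStable(int trackingID)
    {
        if (!this.positions.TryGetValue(trackingID, out List<Vector> window) || window.Count != this.windowSize)
        {
            return false; // not enough history yet
        }

        Vector current = window[window.Count - 1];
        for (int i = 0; i < window.Count - 1; i++)
        {
            if (window[i].Subtract(current).Length() > this.Threshold)
            {
                return false;
            }
        }

        return true;
    }
}
```

A user who drifts by a centimetre or two per frame stays "stable" under these example settings, while a single large movement anywhere in the window, including the frame just before the latest one, makes IsStable return false until the window has refilled with nearby positions.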

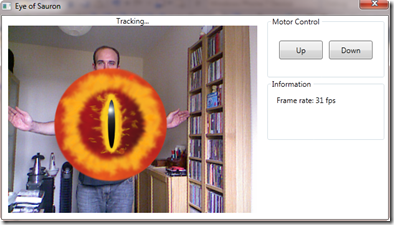

The application is shown below. It uses skeleton tracking to derive whether the user is moving or stationary, and indicates this on the UI.

Conclusion

The Kinect for Windows SDK beta from Microsoft Research is a starter kit for application developers: it provides access to the Kinect sensor and allows experimentation with its features. The first part of my gesture recognition process, determining whether the user is moving or stationary, is performed by the BaryCenter class. Coupled with pose recognition, this will produce a robust indication of whether or not a gesture is being made.