Introduction

My previous posts about the Kinect SDK examined different aspects of the SDK functionality:

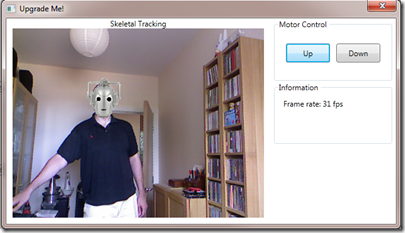

In this post, I’ll build on these topics by integrating them into one application, the purpose of which is to ‘upgrade’ a person into a cyberman. The application uses speech recognition to recognise the word ‘upgrade’, then tracks the user’s skeleton to locate their head, and finally superimposes a cyber helmet over it. The helmet stays superimposed for as long as the user is tracked.

Implementation

The first step in implementing this application (after creating a new WPF project) is to add a reference to Microsoft.Research.Kinect. This assembly is in the GAC, and calls unmanaged functions from managed code. I then developed a basic UI, using XAML, that displays the video stream from the sensor, allows motor control for fine-tuning the sensor position, and displays the frame rate. The code is shown below. In MainWindow.xaml, the window’s Loaded and Closed events are wired up to the Window_Loaded and Window_Closed handlers, and a Canvas is used to display the cyber head. A ColorAnimation makes the word ‘Upgrade’ appear to flash over the video stream.

    <Window x:Class="KinectDemo.MainWindow"
            xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
            xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
            Title="Upgrade Me!" ResizeMode="NoResize" SizeToContent="WidthAndHeight"
            Loaded="Window_Loaded" Closed="Window_Closed">
      <Grid>
        <Grid.ColumnDefinitions>
          <ColumnDefinition Width="Auto" />
          <ColumnDefinition Width="200" />
        </Grid.ColumnDefinitions>
        <StackPanel Grid.Column="0">
          <TextBlock HorizontalAlignment="Center" Text="Skeletal Tracking" />
          <Image x:Name="video" Height="300" Margin="10,0,10,10" Width="400" />
          <Canvas x:Name="canvas" Height="300" Margin="10,-310,10,10" Width="400">
            <Image x:Name="head" Height="70" Source="Images/cyberman.png" Visibility="Collapsed" Width="70" />
            <TextBlock x:Name="upgradeText" Canvas.Left="57" Canvas.Top="101" FontSize="72" Foreground="Transparent" Text="Upgrade">
              <TextBlock.Triggers>
                <EventTrigger RoutedEvent="TextBlock.Loaded">
                  <EventTrigger.Actions>
                    <BeginStoryboard>
                      <Storyboard BeginTime="00:00:02" RepeatBehavior="Forever" Storyboard.TargetProperty="(Foreground).(SolidColorBrush.Color)">
                        <ColorAnimation From="Transparent" To="White" Duration="0:0:1.5" />
                      </Storyboard>
                    </BeginStoryboard>
                  </EventTrigger.Actions>
                </EventTrigger>
              </TextBlock.Triggers>
            </TextBlock>
          </Canvas>
        </StackPanel>
        <StackPanel Grid.Column="1">
          <GroupBox Header="Motor Control" Height="100" VerticalAlignment="Top" Width="190">
            <StackPanel HorizontalAlignment="Center" Margin="10" Orientation="Horizontal">
              <Button x:Name="motorUp" Click="motorUp_Click" Content="Up" Height="30" Width="70" />
              <Button x:Name="motorDown" Click="motorDown_Click" Content="Down" Height="30" Margin="10,0,0,0" Width="70" />
            </StackPanel>
          </GroupBox>
          <GroupBox Header="Information" Height="100" VerticalAlignment="Top" Width="190">
            <StackPanel Orientation="Horizontal" Margin="10">
              <TextBlock Text="Frame rate: " />
              <TextBlock x:Name="fps" Text="0 fps" VerticalAlignment="Top" Width="50" />
            </StackPanel>
          </GroupBox>
        </StackPanel>
      </Grid>
    </Window>

The class-level declarations are shown below. They are largely an amalgamation of the declarations from my previous blog posts. Note that the Upgrade property uses a Dispatcher object to marshal its UI updates back onto the UI thread. This is necessary because the Upgrade property is only ever set from the audio capture thread.

    private Runtime nui;
    private Camera cam;
    private int totalFrames = 0;
    private int lastFrames = 0;
    private DateTime lastTime = DateTime.MaxValue;
    private Thread t;
    private KinectAudioSource source;
    private SpeechRecognitionEngine sre;
    private Stream stream;
    private const string RecognizerId = "SR_MS_en-US_Kinect_10.0";
    private bool upgrade;

    private bool Upgrade
    {
        get { return this.upgrade; }
        set
        {
            this.upgrade = value;
            if (this.upgrade == true)
            {
                this.Dispatcher.Invoke(DispatcherPriority.Normal, new Action(() =>
                {
                    this.upgradeText.Visibility = Visibility.Collapsed;
                    this.head.Visibility = Visibility.Visible;
                }));
            }
            else
            {
                this.Dispatcher.Invoke(DispatcherPriority.Normal, new Action(() =>
                {
                    this.head.Visibility = Visibility.Collapsed;
                    this.upgradeText.Visibility = Visibility.Visible;
                }));
            }
            this.OnPropertyChanged("Upgrade");
        }
    }
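The Upgrade setter always goes through Dispatcher.Invoke because it is only expected to be called from a non-UI thread. If a setter like this might be called from either thread, one common pattern is to check Dispatcher.CheckAccess first. The following is a minimal sketch of that pattern, not code from the application; the UiThread class and its Run method are hypothetical names introduced here for illustration.

```csharp
using System;
using System.Windows.Threading;

// Hypothetical helper, not part of the original application.
public static class UiThread
{
    // Runs the action directly when the caller is already on the
    // dispatcher's thread; otherwise marshals it to that thread and
    // blocks until it has completed.
    public static void Run(Dispatcher dispatcher, Action action)
    {
        if (dispatcher.CheckAccess())
        {
            action();
        }
        else
        {
            dispatcher.Invoke(DispatcherPriority.Normal, action);
        }
    }
}
```

With a helper like this, each branch of the setter could be written as a call such as UiThread.Run(this.Dispatcher, () => { ... }), and would behave the same whichever thread sets the property.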

As before, the constructor starts a new MTA thread to start the audio capture process. For an explanation of why an MTA thread is required, see this previous post.

    public MainWindow()
    {
        InitializeComponent();

        this.t = new Thread(new ThreadStart(RecognizeAudio));
        this.t.SetApartmentState(ApartmentState.MTA);
        this.t.Start();
    }

The RecognizeAudio method handles audio capture and speech recognition. For an explanation of how this code works, see this previous post.

    private void RecognizeAudio()
    {
        this.source = new KinectAudioSource();
        this.source.FeatureMode = true;
        this.source.AutomaticGainControl = false;
        this.source.SystemMode = SystemMode.OptibeamArrayOnly;

        // Give up if the Kinect speech recognizer is not installed.
        RecognizerInfo ri = SpeechRecognitionEngine.InstalledRecognizers().Where(r => r.Id == RecognizerId).FirstOrDefault();
        if (ri == null)
        {
            return;
        }

        this.sre = new SpeechRecognitionEngine(ri.Id);

        // Build a grammar that contains only the word "upgrade".
        var word = new Choices();
        word.Add("upgrade");
        var gb = new GrammarBuilder();
        gb.Culture = ri.Culture;
        gb.Append(word);
        var g = new Grammar(gb);
        this.sre.LoadGrammar(g);

        this.sre.SpeechRecognized += new EventHandler<SpeechRecognizedEventArgs>(sre_SpeechRecognized);
        this.sre.SpeechRecognitionRejected += new EventHandler<SpeechRecognitionRejectedEventArgs>(sre_SpeechRecognitionRejected);

        // The sensor's audio stream is 16 kHz, 16-bit, mono PCM.
        this.stream = this.source.Start();
        this.sre.SetInputToAudioStream(this.stream, new SpeechAudioFormatInfo(EncodingFormat.Pcm, 16000, 16, 1, 32000, 2, null));
        this.sre.RecognizeAsync(RecognizeMode.Multiple);
    }

    private void sre_SpeechRecognitionRejected(object sender, SpeechRecognitionRejectedEventArgs e)
    {
        this.Upgrade = false;
    }

    private void sre_SpeechRecognized(object sender, SpeechRecognizedEventArgs e)
    {
        this.Upgrade = true;
    }
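The numeric arguments passed to SpeechAudioFormatInfo are not independent: for uncompressed PCM, the block alignment and average bytes per second follow from the sample rate, bit depth and channel count. The sketch below works through that arithmetic for the values used above. PcmFormat is a hypothetical helper written for this illustration and is not part of the application or of System.Speech.

```csharp
using System;

// Hypothetical helper, for illustration only.
public static class PcmFormat
{
    // Bytes in one sample frame (one sample for every channel).
    public static int BlockAlign(int bitsPerSample, int channels)
    {
        return channels * bitsPerSample / 8;
    }

    // Average bytes per second of uncompressed PCM audio.
    public static int AverageBytesPerSecond(int samplesPerSecond, int bitsPerSample, int channels)
    {
        return samplesPerSecond * BlockAlign(bitsPerSample, channels);
    }

    public static void Main()
    {
        Console.WriteLine(BlockAlign(16, 1));                   // prints 2
        Console.WriteLine(AverageBytesPerSecond(16000, 16, 1)); // prints 32000
    }
}
```

These match the 32000 and 2 passed as the fifth and sixth constructor arguments.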

The Window_Loaded event handler creates the NUI runtime object, initializes it for colour video and skeletal tracking, opens the video stream, and registers the event handlers that the runtime calls when a video frame is ready, and when a skeleton frame is ready.

    private void Window_Loaded(object sender, RoutedEventArgs e)
    {
        this.nui = new Runtime();

        try
        {
            nui.Initialize(RuntimeOptions.UseColor | RuntimeOptions.UseSkeletalTracking);
            this.cam = nui.NuiCamera;
        }
        catch (InvalidOperationException)
        {
            MessageBox.Show("Runtime initialization failed. Ensure Kinect is plugged in");
            return;
        }

        try
        {
            this.nui.VideoStream.Open(ImageStreamType.Video, 2, ImageResolution.Resolution640x480, ImageType.Color);
        }
        catch (InvalidOperationException)
        {
            MessageBox.Show("Failed to open stream. Specify a supported image type/resolution.");
            return;
        }

        this.lastTime = DateTime.Now;
        this.nui.VideoFrameReady += new EventHandler<ImageFrameReadyEventArgs>(nui_VideoFrameReady);
        this.nui.SkeletonFrameReady += new EventHandler<SkeletonFrameReadyEventArgs>(nui_SkeletonFrameReady);
    }

The code for the nui_VideoFrameReady method can be found in this previous post, as can the code for CalculateFPS.
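Since CalculateFPS is not reproduced in this post, the following is only a guess at one reasonable shape for a frame-rate calculation, reusing the names of the totalFrames, lastFrames and lastTime fields declared earlier. The FrameRateCounter class and its Tick method are hypothetical and may differ from the code in the previous post.

```csharp
using System;

// Hypothetical sketch; the original CalculateFPS may differ.
public class FrameRateCounter
{
    private int totalFrames = 0;
    private int lastFrames = 0;
    private DateTime lastTime;

    public FrameRateCounter(DateTime start)
    {
        this.lastTime = start;
    }

    // Call once per frame. Returns the frame rate over the last interval
    // once at least one second has elapsed, otherwise null.
    public int? Tick(DateTime now)
    {
        this.totalFrames++;
        TimeSpan elapsed = now - this.lastTime;
        if (elapsed < TimeSpan.FromSeconds(1))
        {
            return null;
        }

        int frameDiff = this.totalFrames - this.lastFrames;
        this.lastFrames = this.totalFrames;
        this.lastTime = now;
        return (int)Math.Round(frameDiff / elapsed.TotalSeconds);
    }
}
```

In a handler such as nui_VideoFrameReady, a non-null result from Tick(DateTime.Now) could be written to the fps TextBlock.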

If the Upgrade property is true, nui_SkeletonFrameReady retrieves a frame of skeleton data. e.SkeletonFrame.Skeletons is an array of SkeletonData structures, each of which contains the data for a single skeleton. If the TrackingState field of the SkeletonData structure indicates that the skeleton is being tracked, a loop enumerates all the joints in the SkeletonData structure. If a joint’s Position.W value is below 0.6, the handler returns without processing the rest of the frame. If the joint is the head (JointID.Head), getDisplayPosition is called to convert its coordinates from skeleton space to image space. The image of the cyber helmet is then positioned on the Canvas at the coordinates returned by getDisplayPosition.

    private void nui_SkeletonFrameReady(object sender, SkeletonFrameReadyEventArgs e)
    {
        if (this.Upgrade == true)
        {
            foreach (SkeletonData data in e.SkeletonFrame.Skeletons)
            {
                if (SkeletonTrackingState.Tracked == data.TrackingState)
                {
                    foreach (Joint joint in data.Joints)
                    {
                        // Stop processing this frame if a joint's W value is below 0.6.
                        if (joint.Position.W < 0.6f)
                        {
                            return;
                        }

                        if (joint.ID == JointID.Head)
                        {
                            // Move the helmet image to the head's position on the canvas.
                            var point = this.getDisplayPosition(joint);
                            Canvas.SetLeft(head, point.X);
                            Canvas.SetTop(head, point.Y);
                        }
                    }
                }
            }
        }
    }

The getDisplayPosition method converts coordinates in skeleton space to coordinates in image space. For an explanation of how this code works, see this previous post.

    private Point getDisplayPosition(Joint joint)
    {
        int colourX, colourY;
        float depthX, depthY;

        // Convert the joint position to depth image space, then scale it
        // to 320x240 and clamp it to those bounds.
        this.nui.SkeletonEngine.SkeletonToDepthImage(joint.Position, out depthX, out depthY);
        depthX = Math.Max(0, Math.Min(depthX * 320, 320));
        depthY = Math.Max(0, Math.Min(depthY * 240, 240));

        // Map the depth pixel to the corresponding pixel in the 640x480 colour image.
        ImageViewArea imageView = new ImageViewArea();
        this.nui.NuiCamera.GetColorPixelCoordinatesFromDepthPixel(ImageResolution.Resolution640x480, imageView,
            (int)depthX, (int)depthY, 0, out colourX, out colourY);

        // Scale to the canvas size and apply fixed offsets.
        return new Point((int)(this.canvas.Width * colourX / 640) - 40, (int)(this.canvas.Height * colourY / 480) - 30);
    }
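The final line of getDisplayPosition is plain arithmetic, so it can be checked without a sensor. The sketch below repeats that calculation for the 400x300 canvas defined in the XAML. CanvasMapping and ToCanvas are hypothetical names used only for this worked example.

```csharp
using System;

// Hypothetical helper, for illustration only.
public static class CanvasMapping
{
    // Scales a pixel in the 640x480 colour image to a canvas of the given
    // size, then applies the fixed offsets (40, 30) used in getDisplayPosition.
    public static void ToCanvas(double canvasWidth, double canvasHeight,
                                int colourX, int colourY,
                                out int left, out int top)
    {
        left = (int)(canvasWidth * colourX / 640) - 40;
        top = (int)(canvasHeight * colourY / 480) - 30;
    }

    public static void Main()
    {
        int left, top;
        ToCanvas(400, 300, 320, 240, out left, out top);
        Console.WriteLine(left + ", " + top); // prints 160, 120
    }
}
```

A head detected at the centre of the colour image therefore places the top-left corner of the helmet image at (160, 120) on the canvas.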

The Window_Closed event handler stops the audio capture from the Kinect sensor, disposes of the capture stream, aborts the audio thread, stops the speech recognition process, and uninitializes the NUI runtime.

    private void Window_Closed(object sender, EventArgs e)
    {
        if (this.source != null)
        {
            this.source.Stop();
        }
        if (this.stream != null)
        {
            this.stream.Dispose();
        }
        if (this.t != null)
        {
            this.t.Abort();
        }
        if (this.sre != null)
        {
            this.sre.RecognizeAsyncStop();
        }
        nui.Uninitialize();
    }

The application is shown below. When the user says ‘upgrade’, and provided that the skeleton tracking has identified a person, a cyber helmet is placed over the identified user’s head, and it continues to cover the user’s head as they are tracked. While the logic isn’t flawless, it does show what can be achieved with a relatively small amount of code.

Conclusion

The Kinect for Windows SDK beta from Microsoft Research is a starter kit for application developers. It allows access to the Kinect sensor, and experimentation with its features. The sensor provides skeleton tracking data to the application, which can be used to overlay an image on a specific part of a person in real time.