Introduction

The Kinect for Windows SDK beta from Microsoft Research is a starter kit for application developers. It enables access to the Kinect sensor, and experimentation with its features. Specifically it enables experimentation with:

- Skeletal tracking for the image of one or two persons who are moving within the Kinect sensor's field of view.

- XYZ-depth camera for accessing a standard colour camera stream plus depth data that indicates the distance of an object from the Kinect sensor.

- Audio processing for a four-element microphone array with acoustic noise and echo cancellation, plus beam formation to identify the current sound source and integration with the Microsoft.Speech speech recognition API.

This first post in the series examines how to implement a basic managed Kinect application that gets video and depth data, transforms it, and displays it.

Background

The NUI (Natural User Interface) API is the core of the Kinect for Windows API. It supports, amongst other items:

- Access to the Kinect sensors that are connected to the computer.

- Access to image and depth data streams from the Kinect image sensors.

- Delivery of a processed version of image and depth data to support skeletal tracking.

The NUI API processes data from the Kinect sensor through a multi-stage pipeline. At initialization, you must specify the subsystems that it uses, so that the runtime can start the required portions of the pipeline. An application can choose one or more of the following options:

- Colour - the application streams colour image data from the sensor.

- Depth - the application streams depth image data from the sensor.

- Depth and player index - the application streams depth data from the sensor and requires the player index that the skeleton tracking engine generates.

- Skeleton - the application uses skeleton position data.

Stream data is delivered as a succession of still-image frames. At NUI initialization, the application identifies the streams it plans to use. It then opens each stream with additional stream-specific details, such as the stream resolution and image type.

Colour Data

The colour data is available in two formats:

- RGB - 32-bit, linear RGB-formatted colour bitmaps, in sRGB colour space.

- YUV - 16-bit, gamma-corrected linear YUV-formatted colour bitmaps.

Depth Data

The depth data stream provides frames in which the high 13 bits of each pixel give the distance, in millimetres, to the nearest object. The following depth data stream resolutions are supported:

- 640x480 pixels

- 320x240 pixels

- 80x60 pixels

Applications can process data from a depth stream to support various custom features, such as tracking users' motions or identifying background objects to ignore during application play.
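To make the pixel layout described above concrete, here is a minimal sketch in Python (not the post's C#) of splitting one 16-bit depth pixel into its distance and its remaining low bits. It assumes each pixel arrives as two bytes in little-endian order, and that the low 3 bits carry the player index when the depth and player index option is requested. Both points are assumptions for illustration, and `unpack_depth_pixel` is a hypothetical helper, not part of the SDK.

```python
from typing import Tuple


def unpack_depth_pixel(low_byte: int, high_byte: int) -> Tuple[int, int]:
    """Split one 16-bit depth pixel into (distance_mm, low_bits).

    Assumes little-endian byte order (an assumption, not taken from the SDK).
    """
    raw = low_byte | (high_byte << 8)  # rebuild the 16-bit value
    distance_mm = raw >> 3             # high 13 bits: distance in millimetres
    low_bits = raw & 0x07              # low 3 bits: assumed player index
    return distance_mm, low_bits


# Build a pixel whose high 13 bits encode 1000 mm and whose low 3 bits are 2.
raw = (1000 << 3) | 2
print(unpack_depth_pixel(raw & 0xFF, raw >> 8))  # prints (1000, 2)
```

With 13 bits available, the largest distance that can be represented is 8191 mm.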

Implementing a Managed Application

The application documented here gets the video and depth data from the Kinect sensor and displays both. It also copies the video data into a grey scale representation, which it displays as well.

The first step in implementing this application, after creating a new WPF project, is to add a reference to Microsoft.Research.Kinect. This assembly is in the GAC, and calls unmanaged functions from managed code. To use the NUI API you must then import the Microsoft.Research.Kinect.Nui namespace into your application.

I then built a basic UI in XAML that uses Image controls to display the video, grey scale video, and depth data. The code can be seen below. The window defined in MainWindow.xaml is wired up to the Loaded and Closed events.

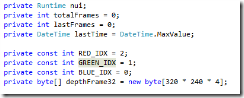

MainWindow.xaml.cs contains the following class-level declarations:

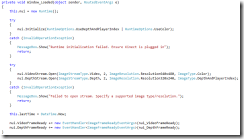

The Window_Loaded event handler creates a new instance of the Kinect runtime and initializes it to use the depth and colour video subsystems. It then opens both the video and depth streams, specifying the resolution to use for each. Finally, it registers event handlers that are invoked each time a video frame or a depth frame is ready on the sensor.

The nui_VideoFrameReady event handler creates a planar image from the received image frame and displays it in the Image control named video, which is defined in the XAML. It then converts the image frame to grey scale and displays the result in the Image control named greyVideo, which is also defined in the XAML.

The convertVideoFrameToGreyScale method takes the RGB colour image data and converts it to grey scale, using the well-known equation. Note: if you only want to display a grey scale video stream, it may be more efficient to request the video stream from the sensor in YUV format and display just the Y channel. I used the RGB approach to demonstrate how to manipulate the pixel data for the image.
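The C# listing for this method is not reproduced here. As a rough, language-neutral illustration of the kind of per-pixel work involved, the Python sketch below converts a 32-bit pixel buffer to grey scale. It assumes the bytes are laid out as blue, green, red, plus one unused byte per pixel, and it assumes the common Rec. 601 luma weights (0.299, 0.587, 0.114); the post does not say which equation or byte order was actually used, and `to_grey_scale` is a hypothetical name.

```python
def to_grey_scale(pixels: bytes) -> bytearray:
    """Return a grey scale copy of a 32-bit pixel buffer.

    Assumed layout per pixel: blue, green, red, unused (4 bytes).
    """
    if len(pixels) % 4 != 0:
        raise ValueError("buffer length must be a multiple of 4 bytes")

    out = bytearray(len(pixels))
    for i in range(0, len(pixels), 4):
        blue, green, red = pixels[i], pixels[i + 1], pixels[i + 2]
        # Assumed Rec. 601 weights, scaled by 1000 to stay in integer maths.
        grey = (299 * red + 587 * green + 114 * blue) // 1000
        out[i] = out[i + 1] = out[i + 2] = grey  # same value in all channels
        out[i + 3] = pixels[i + 3]               # carry the fourth byte over
    return out


# One pure-red pixel followed by one white pixel.
frame = bytes([0, 0, 255, 0, 255, 255, 255, 0])
print(list(to_grey_scale(frame)))  # prints [76, 76, 76, 0, 255, 255, 255, 0]
```

Writing the same grey value into all three colour channels keeps the output in the same 32-bit layout as the input, so it can be displayed in the same way as the colour frame.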

The nui_DepthFrameReady event handler takes the depth data, converts it into a displayable format, and displays it. It also calculates a frames-per-second value, shown in the application, that indicates how many frames are being processed each second.
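One simple way to derive a frames-per-second figure inside a frame-ready handler is to count frames and report the count whenever roughly a second has elapsed. The Python sketch below shows that idea only; it is not the post's C# code, and `FrameRateCounter`, `frame_arrived`, and the injectable `clock` parameter are hypothetical names introduced for illustration.

```python
import time


class FrameRateCounter:
    """Counts frames and reports a rate once per one-second window."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock              # injectable so the counter can be tested
        self._window_start = clock()
        self._frames = 0
        self.fps = 0.0                   # last completed measurement

    def frame_arrived(self) -> float:
        """Call once per frame; returns the most recent frames-per-second value."""
        self._frames += 1
        now = self._clock()
        elapsed = now - self._window_start
        if elapsed >= 1.0:
            self.fps = self._frames / elapsed
            self._frames = 0             # start a new measurement window
            self._window_start = now
        return self.fps


# Simulate four frames arriving 0.25 s apart using a fake clock.
ticks = iter([0.0, 0.25, 0.5, 0.75, 1.0])
counter = FrameRateCounter(clock=lambda: next(ticks))
print([counter.frame_arrived() for _ in range(4)])  # prints [0.0, 0.0, 0.0, 4.0]
```

In a real handler the default monotonic clock would be used, and the returned value would be written to whatever UI element displays the rate.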

The convertDepthFrame method converts a 16-bit grey scale depth frame into a 32-bit frame for display. It does this by transforming the 13-bit depth information into an 8-bit intensity value.
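The transformation described above can be sketched as follows. This is a Python illustration rather than the post's C# method, and it rests on several assumptions: each depth pixel is two little-endian bytes with the depth in the high 13 bits, the 13-bit range is mapped linearly onto 8 bits by dropping the 5 least significant depth bits, and nearer objects are drawn brighter. The actual mapping used in the application may differ, and `convert_depth_frame` is a hypothetical name.

```python
def convert_depth_frame(depth_frame: bytes) -> bytearray:
    """Expand a 16-bit-per-pixel depth frame into a 32-bit-per-pixel grey frame."""
    if len(depth_frame) % 2 != 0:
        raise ValueError("depth frame must contain whole 16-bit pixels")

    out = bytearray(len(depth_frame) * 2)  # 2 bytes in, 4 bytes out per pixel
    for src in range(0, len(depth_frame), 2):
        raw = depth_frame[src] | (depth_frame[src + 1] << 8)
        depth = raw >> 3                   # 13-bit depth, 0..8191
        intensity = 255 - (depth >> 5)     # 13 bits -> 8 bits, near = bright
        dst = src * 2
        out[dst] = out[dst + 1] = out[dst + 2] = intensity
        # out[dst + 3] is left at 0; the fourth byte is assumed unused.
    return out


# Three pixels with depths 0, 8191, and 4096.
frame = bytes([0x00, 0x00, 0xF8, 0xFF, 0x00, 0x80])
print(list(convert_depth_frame(frame)))
# prints [255, 255, 255, 0, 0, 0, 0, 0, 127, 127, 127, 0]
```

Shifting by 5 bits uses the whole 0 to 8191 range, so objects within the sensor's usual working distance occupy only part of the grey scale; a mapping clamped to a narrower range would give more contrast at the cost of a little extra arithmetic.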

The Window_Closed event handler uninitializes the NUI, releasing its resources, and closes the environment.

Conclusion

The Kinect for Windows SDK beta from Microsoft Research is a starter kit for application developers. It enables access to the Kinect sensor, and experimentation with its features. The SDK is simple to use and provides access to the raw sensor data in an easy-to-use format.
