Introduction

Gesture recognition has long been a research area within computer science, and has seen an increased focus in the last decade due to the development of devices such as smartphones and Microsoft Surface. Its aim is to allow people to interface with a device and interact naturally without any mechanical devices. Multi-touch devices use gestures to perform various actions; for instance, a pinch gesture is commonly used to scale content such as images. In this post I will outline the development of a small application that performs simple gesture recognition in order to scale an image using the user’s hands. The application uses skeleton tracking to recognise the user’s hands, then places an image of the Eye of Sauron at the center point between them. As the user moves their hands apart the size of the image increases, and as the user brings their hands together the size of the image decreases.

Implementation

The XAML for the UI of the application is shown below. A Canvas contains an Image that is used to display the Eye of Sauron. The Image uses a TranslateTransform to control the location of the eye, and a ScaleTransform to control the size of the eye. The properties of both RenderTransforms are set using bindings, as is the Visibility property of the Image.

    <Window x:Class="KinectDemo.MainWindow"
            xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
            xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
            Title="Eye of Sauron" ResizeMode="NoResize" SizeToContent="WidthAndHeight"
            Loaded="Window_Loaded" Closed="Window_Closed">
        <Grid>
            <Grid.ColumnDefinitions>
                <ColumnDefinition Width="Auto" />
                <ColumnDefinition Width="200" />
            </Grid.ColumnDefinitions>
            <StackPanel Grid.Column="0">
                <TextBlock HorizontalAlignment="Center"
                           Text="Tracking..." />
                <Image Height="300"
                       Margin="10,0,10,10"
                       Source="{Binding ColourBitmap}"
                       Width="400" />
                <Canvas x:Name="canvas"
                        Height="300"
                        Margin="10,-310,10,10"
                        Width="400">
                    <Image x:Name="eye"
                           Height="400"
                           Width="400"
                           Source="Images/SauronEye.png"
                           Visibility="{Binding ImageVisibility}">
                        <Image.RenderTransform>
                            <TransformGroup>
                                <TranslateTransform X="{Binding TranslateX}"
                                                    Y="{Binding TranslateY}" />
                                <ScaleTransform CenterX="{Binding CenterX}"
                                                CenterY="{Binding CenterY}"
                                                ScaleX="{Binding ScaleFactor}"
                                                ScaleY="{Binding ScaleFactor}" />
                            </TransformGroup>
                        </Image.RenderTransform>
                    </Image>
                </Canvas>
            </StackPanel>
            <StackPanel Grid.Column="1">
                <GroupBox Header="Motor Control"
                          Height="100"
                          VerticalAlignment="Top"
                          Width="190">
                    <StackPanel HorizontalAlignment="Center"
                                Margin="10"
                                Orientation="Horizontal">
                        <Button x:Name="motorUp"
                                Click="motorUp_Click"
                                Content="Up"
                                Height="30"
                                Width="70" />
                        <Button x:Name="motorDown"
                                Click="motorDown_Click"
                                Content="Down"
                                Height="30"
                                Margin="10,0,0,0"
                                Width="70" />
                    </StackPanel>
                </GroupBox>
                <GroupBox Header="Information"
                          Height="100"
                          VerticalAlignment="Top"
                          Width="190">
                    <StackPanel Orientation="Horizontal" Margin="10">
                        <TextBlock Text="Frame rate: " />
                        <TextBlock Text="{Binding FramesPerSecond}"
                                   VerticalAlignment="Top"
                                   Width="50" />
                    </StackPanel>
                </GroupBox>
            </StackPanel>
        </Grid>
    </Window>

The constructor initializes the StreamManager class (contained in my KinectManager library), which handles the stream processing. The DataContext of MainWindow is set to kinectStream for binding purposes.

    public MainWindow()
    {
        InitializeComponent();
        this.kinectStream = new StreamManager();
        this.DataContext = this.kinectStream;
    }

The Window_Loaded event handler initializes the required subsystems of the Kinect pipeline, and invokes SmoothSkeletonData (which has now moved to the StreamManager class). For an explanation of what the SmoothSkeletonData method does, see this previous post. Finally, event handlers are registered for the subsystems of the Kinect pipeline.

    private void Window_Loaded(object sender, RoutedEventArgs e)
    {
        this.runtime = new Runtime();

        try
        {
            this.runtime.Initialize(RuntimeOptions.UseColor |
                                    RuntimeOptions.UseSkeletalTracking);
            this.cam = runtime.NuiCamera;
        }
        catch (InvalidOperationException)
        {
            MessageBox.Show("Runtime initialization failed. Ensure Kinect is plugged in");
            return;
        }

        try
        {
            this.runtime.VideoStream.Open(ImageStreamType.Video, 2,
                ImageResolution.Resolution640x480, ImageType.Color);
        }
        catch (InvalidOperationException)
        {
            MessageBox.Show("Failed to open stream. Specify a supported image type/resolution.");
            return;
        }

        this.kinectStream.KinectRuntime = this.runtime;
        this.kinectStream.LastTime = DateTime.Now;
        this.kinectStream.SmoothSkeletonData();

        this.runtime.VideoFrameReady +=
            new EventHandler<ImageFrameReadyEventArgs>(nui_VideoFrameReady);
        this.runtime.SkeletonFrameReady +=
            new EventHandler<SkeletonFrameReadyEventArgs>(nui_SkeletonFrameReady);
    }

The SkeletonFrameReady event handler invokes the GetSkeletonStream method in the StreamManager class, and passes a number of parameters into it.

    private void nui_SkeletonFrameReady(object sender, SkeletonFrameReadyEventArgs e)
    {
        this.kinectStream.GetSkeletonStream(e,
            (int)this.eye.Width,
            (int)this.eye.Height,
            (int)this.canvas.Width,
            (int)this.canvas.Height);
    }

Several important properties from the StreamManager class are shown below. The CenterX and CenterY properties are bound to from the ScaleTransform in the UI, and specify the point that is the center of the scale operation. The ImageVisibility property is bound to from the Image control in the UI, and is used to control the visibility of the image of the eye. The KinectRuntime property is used to access the runtime instance from MainWindow.xaml.cs. The ScaleFactor property is bound to from the ScaleTransform in the UI, and is used to resize the image of the eye by the factor specified. The TranslateX and TranslateY properties are bound to from the TranslateTransform in the UI, and are used to specify the location of the image of the eye.

    public float CenterX
    {
        get { return this.centerX; }
        private set
        {
            this.centerX = value;
            this.OnPropertyChanged("CenterX");
        }
    }

    public float CenterY
    {
        get { return this.centerY; }
        private set
        {
            this.centerY = value;
            this.OnPropertyChanged("CenterY");
        }
    }

    public Visibility ImageVisibility
    {
        get { return this.imageVisibility; }
        private set
        {
            this.imageVisibility = value;
            this.OnPropertyChanged("ImageVisibility");
        }
    }

    public Runtime KinectRuntime { get; set; }

    public float ScaleFactor
    {
        get { return this.scaleFactor; }
        private set
        {
            this.scaleFactor = value;
            this.OnPropertyChanged("ScaleFactor");
        }
    }

    public float TranslateX
    {
        get { return this.translateX; }
        private set
        {
            this.translateX = value;
            this.OnPropertyChanged("TranslateX");
        }
    }

    public float TranslateY
    {
        get { return this.translateY; }
        private set
        {
            this.translateY = value;
            this.OnPropertyChanged("TranslateY");
        }
    }
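
The property setters above call OnPropertyChanged, which is not shown in this post. As a minimal sketch of how such a method is commonly provided, assuming the class implements INotifyPropertyChanged so that the WPF bindings are refreshed: the NotifyingBase and ScaleModel names below are hypothetical and are not taken from the KinectManager library.

```csharp
using System.ComponentModel;

// Hypothetical base class; the real StreamManager may be organised differently.
public class NotifyingBase : INotifyPropertyChanged
{
    // WPF bindings subscribe to this event to learn when a property value changes.
    public event PropertyChangedEventHandler PropertyChanged;

    protected void OnPropertyChanged(string propertyName)
    {
        // Copy the delegate first so a subscriber detaching mid-call cannot cause a null reference.
        PropertyChangedEventHandler handler = this.PropertyChanged;
        if (handler != null)
        {
            handler(this, new PropertyChangedEventArgs(propertyName));
        }
    }
}

// Hypothetical model with one bindable property, following the same pattern as the post.
public class ScaleModel : NotifyingBase
{
    private float scaleFactor;

    public float ScaleFactor
    {
        get { return this.scaleFactor; }
        set
        {
            this.scaleFactor = value;
            this.OnPropertyChanged("ScaleFactor");
        }
    }
}
```

Setting ScaleFactor on a ScaleModel instance raises PropertyChanged with the property name, which is what prompts a bound ScaleTransform to re-read the value.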

The GetSkeletonStream method, in the StreamManager class, is shown below. It retrieves a frame of skeleton data and processes it as follows: e.SkeletonFrame.Skeletons is an array of SkeletonData structures, each of which contains the data for a single skeleton. If the TrackingState field of the SkeletonData structure indicates that the skeleton is being tracked, the image of the eye is made visible and the handLeft and handRight Vectors are set to the positions of the left and right hands, respectively. The center point between the hands is then calculated as a Vector. The CenterX and CenterY properties are then set to the image space coordinates returned from GetDisplayPosition. The TranslateX and TranslateY properties are then set to the location to display the image of the eye at. Finally, the ScaleFactor property is set to the distance between the right and left hands.

    public void GetSkeletonStream(SkeletonFrameReadyEventArgs e, int imageWidth,
        int imageHeight, int canvasWidth, int canvasHeight)
    {
        Vector handLeft = new Vector();
        Vector handRight = new Vector();
        JointsCollection joints = null;

        foreach (SkeletonData data in e.SkeletonFrame.Skeletons)
        {
            if (SkeletonTrackingState.Tracked == data.TrackingState)
            {
                this.ImageVisibility = Visibility.Visible;
                joints = data.Joints;
                handLeft = joints[JointID.HandLeft].Position;
                handRight = joints[JointID.HandRight].Position;
                break;
            }
            else
            {
                this.ImageVisibility = Visibility.Collapsed;
            }
        }

        // Find center between hands
        Vector position = new Vector
        {
            X = (handLeft.X + handRight.X) / 2.0f,
            Y = (handLeft.Y + handRight.Y) / 2.0f,
            Z = (handLeft.Z + handRight.Z) / 2.0f
        };

        // Convert depth space co-ordinates to image space
        Vector displayPosition = this.GetDisplayPosition(position,
            canvasWidth, canvasHeight);
        this.CenterX = displayPosition.X;
        this.CenterY = displayPosition.Y;

        // Position image at center of hands
        displayPosition.X = (float)(displayPosition.X - (imageWidth / 2));
        displayPosition.Y = (float)(displayPosition.Y - (imageHeight / 2));
        this.TranslateX = displayPosition.X;
        this.TranslateY = displayPosition.Y;

        // Derive the scale factor from distance between
        // the right hand and the left hand
        this.ScaleFactor = handRight.X - handLeft.X;
    }
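
The midpoint and scale calculations can be tried in isolation, without a sensor attached. The following is a sketch only: Point3 is a hypothetical stand-in for Microsoft.Research.Kinect.Nui.Vector, HandGeometry is a hypothetical helper class, and the use of Math.Abs is a variation on the code above (it keeps the factor non-negative if the hands cross over), not something the original method does.

```csharp
using System;

// Hypothetical stand-in for the SDK's 3D Vector type, so the sketch compiles without the SDK.
public struct Point3
{
    public float X;
    public float Y;
    public float Z;

    public Point3(float x, float y, float z)
    {
        X = x;
        Y = y;
        Z = z;
    }
}

// Hypothetical helper class; not part of the KinectManager library.
public static class HandGeometry
{
    // Midpoint between the two hands, computed component by component.
    public static Point3 Midpoint(Point3 left, Point3 right)
    {
        return new Point3(
            (left.X + right.X) / 2.0f,
            (left.Y + right.Y) / 2.0f,
            (left.Z + right.Z) / 2.0f);
    }

    // Horizontal separation of the hands in skeleton space.
    // Math.Abs is an assumption added here: a negative ScaleX or ScaleY
    // would mirror the image rather than shrink it.
    public static float ScaleFromHands(Point3 left, Point3 right)
    {
        return Math.Abs(right.X - left.X);
    }
}
```

For example, with the left hand at (-0.2, 0.1, 1.5) and the right hand at (0.4, 0.3, 1.7), the midpoint is approximately (0.1, 0.2, 1.6) and the scale factor is approximately 0.6.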

Skeleton data and image data are based on different coordinate systems. Therefore, it is necessary to convert coordinates in skeleton space to image space, which is what the GetDisplayPosition method does. Skeleton coordinates in the range [-1.0, 1.0] are converted to depth coordinates by calling SkeletonEngine.SkeletonToDepthImage. This method returns x and y coordinates as floating-point numbers in the range [0.0, 1.0]. The floating-point coordinates are then converted to values in the 320x240 depth coordinate space, which is the range that NuiCamera.GetColorPixelCoordinatesFromDepthPixel currently supports. The depth coordinates are then converted to colour image coordinates by calling NuiCamera.GetColorPixelCoordinatesFromDepthPixel. This method returns colour image coordinates as values in the 640x480 colour image space. Finally, the colour image coordinates are scaled to the size of the canvas display in the application, by dividing the x coordinate by 640 and the y coordinate by 480, and multiplying the results by the width or height of the canvas display area, respectively.

    private Vector GetDisplayPosition(Vector position, int width, int height)
    {
        int colourX, colourY;
        float depthX, depthY;

        this.KinectRuntime.SkeletonEngine.SkeletonToDepthImage(position,
            out depthX, out depthY);
        depthX = Math.Max(0, Math.Min(depthX * 320, 320));
        depthY = Math.Max(0, Math.Min(depthY * 240, 240));

        ImageViewArea imageView = new ImageViewArea();
        this.KinectRuntime.NuiCamera.GetColorPixelCoordinatesFromDepthPixel(
            ImageResolution.Resolution640x480,
            imageView,
            (int)depthX,
            (int)depthY,
            0,
            out colourX,
            out colourY);

        return new Vector
        {
            X = (float)width * colourX / 640,
            Y = (float)height * colourY / 480
        };
    }
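
The arithmetic at either end of GetDisplayPosition can be checked by hand. The sketch below reproduces only the clamping step and the final scaling step; the two SDK calls in between are omitted, and the DisplayMath class and its method names are hypothetical.

```csharp
using System;

// Hypothetical helper class that isolates the pure arithmetic from the SDK calls.
public static class DisplayMath
{
    // Scale a normalised [0.0, 1.0] depth coordinate to a pixel range
    // (320 for x, 240 for y) and clamp it to that range.
    public static float ToDepthPixel(float normalised, int extent)
    {
        return Math.Max(0, Math.Min(normalised * extent, extent));
    }

    // Scale an x coordinate in the 640-pixel-wide colour image to the canvas width.
    public static float ToCanvasX(int colourX, int canvasWidth)
    {
        return (float)canvasWidth * colourX / 640;
    }

    // Scale a y coordinate in the 480-pixel-high colour image to the canvas height.
    public static float ToCanvasY(int colourY, int canvasHeight)
    {
        return (float)canvasHeight * colourY / 480;
    }
}
```

With the 400x300 canvas used in this application, the colour pixel (320, 240) maps to the canvas point (200, 150), and a normalised depth coordinate of 1.2 is clamped to 320 on the x axis.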

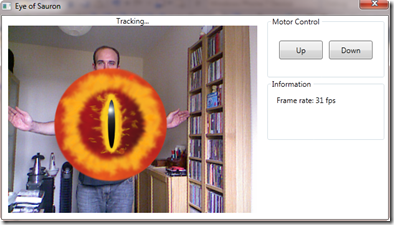

The application is shown above. It uses skeleton tracking to recognise the user’s hands, and once they are recognised it makes the Eye of Sauron visible and places it between the user’s hands. As the user moves their hands apart the size of the eye increases, and as the user brings their hands together the size of the eye decreases. Scaling of the image therefore occurs in response to the application recognising the moving hand gesture.

Conclusion

The Kinect for Windows SDK beta from Microsoft Research is a starter kit for application developers. It allows access to the Kinect sensor, and experimentation with its features. The skeleton tracking data returned from the sensor makes it easy to perform simple gesture recognition. The next step will be to generalise and extend the simple gesture recognition into a GestureRecognition class.

6 comments:

Hi there,

I encounter a problem as below.

'Vector' is an ambiguous reference between 'System.Windows.Vector' and 'Microsoft.Research.Kinect.Nui.Vector'

Can you advise how to fix it?

Thanks - Eric

Sure. You'll no doubt have imported the Microsoft.Research.Kinect.Nui namespace into your app. You'll also need an import as follows:

using Vector = Microsoft.Research.Kinect.Nui.Vector;

This will remove the ambiguity between using System.Windows.Vector (a 2D vector) and Microsoft.Research.Kinect.Nui.Vector (a 3D vector).

I tried but I have problems with the class. Do you mind sharing the demo project here? I'm a newbie in Kinect SDK and thanks for your tutorials.

Sure. You can access a zip of the code at:

https://skydrive.live.com/redir.aspx?cid=1b98b9a3cc9eb1aa&resid=1B98B9A3CC9EB1AA!153&authkey=KJVwhBsFrcM%24

Hi David,

I tried to access your SkyDrive but the link returns an error. Can you reshare the link? Thanks.

Hi David,

I managed to access the link. I needed to use Internet Explorer to open it. -_- Sorry, and I really appreciate your help on this. Thanks.
