Introduction

A previous post documented an application that ‘upgraded’ a person into a cyberman. The application used speech recognition to recognise the word ‘upgrade’, then tracked the user’s skeleton to identify their head, and superimposed a cyber helmet over it. The helmet stayed superimposed for as long as the user was tracked. However, the application had an omission: the cyber helmet had a fixed width and height regardless of the user’s distance from the Kinect sensor, so it only covered the user’s head at one particular distance. As the user approached the sensor, their head became visible around the edges of the helmet. In this blog post I will describe how I’ve incorporated depth data into the application to scale the width and height of the cyber helmet according to the user’s distance from the sensor.

Implementation

Previously, the Image control that displays the cyber helmet had a fixed size. Here, it instead specifies a MaxHeight and MaxWidth of 150, and its actual size is set in code. The rest of the XAML is as in the previous post.

```xml
<Image x:Name="head" MaxHeight="150" MaxWidth="150" Source="Images/cyberman.png" Visibility="Collapsed" />
```

The Kinect sensor does not have sufficient resolution to guarantee consistently accurate skeleton tracking data over time. This shows up as joint positions that appear to jitter around their true values. However, the Kinect SDK provides an algorithm for filtering and smoothing incoming skeleton data. It is enabled in the Window_Loaded event handler below by setting SkeletonEngine.TransformSmooth to true and assigning a TransformSmoothParameters object to SkeletonEngine.SmoothParameters. The parameters can be adjusted to give the level of filtering and smoothing required for your desired user experience.

```csharp
private void Window_Loaded(object sender, RoutedEventArgs e)
{
    this.runtime = new Runtime();
    try
    {
        this.runtime.Initialize(RuntimeOptions.UseColor | RuntimeOptions.UseSkeletalTracking);
        this.cam = runtime.NuiCamera;
    }
    catch (InvalidOperationException)
    {
        MessageBox.Show("Runtime initialization failed. Ensure Kinect is plugged in");
        return;
    }

    try
    {
        this.runtime.VideoStream.Open(ImageStreamType.Video, 2, ImageResolution.Resolution640x480, ImageType.Color);
    }
    catch (InvalidOperationException)
    {
        MessageBox.Show("Failed to open stream. Specify a supported image type/resolution.");
        return;
    }

    // Enable the SDK's skeleton smoothing and tune its parameters.
    this.runtime.SkeletonEngine.TransformSmooth = true;
    var parameters = new TransformSmoothParameters
    {
        Smoothing = 1.0f,
        Correction = 0.1f,
        Prediction = 0.1f,
        JitterRadius = 0.05f,
        MaxDeviationRadius = 0.05f
    };
    this.runtime.SkeletonEngine.SmoothParameters = parameters;

    this.kinectStream.LastTime = DateTime.Now;
    this.runtime.VideoFrameReady += new EventHandler<ImageFrameReadyEventArgs>(nui_VideoFrameReady);
    this.runtime.SkeletonFrameReady += new EventHandler<SkeletonFrameReadyEventArgs>(nui_SkeletonFrameReady);
}
```
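The SDK's filter is configured rather than written by hand, and its internals aren't covered here. To give a feel for the trade-off a smoothing parameter controls (steadier output at the cost of lag), here is a minimal sketch of a much simpler single exponential filter for one joint coordinate. It is not the SDK's algorithm, which also takes correction, prediction and jitter/deviation radii into account, and the class name ExponentialSmoother is hypothetical.

```csharp
using System;

// Hypothetical single exponential filter for one joint coordinate.
// NOT the Kinect SDK's smoothing algorithm; it only illustrates the idea.
public sealed class ExponentialSmoother
{
    // 0 returns the raw data; values closer to 1 smooth more but lag more.
    private readonly float smoothing;
    private float? previous;

    public ExponentialSmoother(float smoothing)
    {
        // In this simple filter a value of 1 would freeze the output forever,
        // so only [0, 1) is accepted.
        if (smoothing < 0f || smoothing >= 1f)
        {
            throw new ArgumentOutOfRangeException(nameof(smoothing));
        }
        this.smoothing = smoothing;
    }

    public float Filter(float raw)
    {
        // The first sample has no history, so it passes through unchanged;
        // later samples are blended with the previous smoothed value.
        previous = previous.HasValue
            ? smoothing * previous.Value + (1f - smoothing) * raw
            : raw;
        return previous.Value;
    }
}
```

With a smoothing value of 0.5, a coordinate that jumps from 1.0 to 0.0 is reported as 0.5 and then 0.25, so a single noisy reading is damped rather than passed straight through. The value used here is not directly comparable with the SDK's Smoothing parameter.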

The nui_SkeletonFrameReady event handler retrieves a frame of skeleton data. e.SkeletonFrame.Skeletons is an array of SkeletonData structures, each of which contains the data for a single skeleton. If the TrackingState field of a SkeletonData structure indicates that the skeleton is being tracked, a loop enumerates all of its joints; the handler abandons the frame if any joint’s position has a W value below 0.6. When the loop reaches the head joint (JointID.Head), the cyber helmet’s width and height are set in inverse proportion to the joint’s Z coordinate (the user’s distance from the sensor), up to the MaxWidth and MaxHeight of 150. getDisplayPosition is then called to convert the joint’s coordinates from skeleton space to image space, and the cyber helmet image is positioned on the Canvas at the coordinates it returns.

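```csharp
private void nui_SkeletonFrameReady(object sender, SkeletonFrameReadyEventArgs e)
{
    if (this.Upgrade == true)
    {
        foreach (SkeletonData data in e.SkeletonFrame.Skeletons)
        {
            if (SkeletonTrackingState.Tracked == data.TrackingState)
            {
                foreach (Joint joint in data.Joints)
                {
                    // Abandon the whole frame if any joint has a low W value.
                    if (joint.Position.W < 0.6f)
                    {
                        return;
                    }

                    if (joint.ID == JointID.Head)
                    {
                        // Size is inversely proportional to the head's distance (Z).
                        // Clamping to MaxHeight/MaxWidth keeps Height/Width equal to the
                        // rendered size, so the centring offsets below stay correct when
                        // the user is very close to the sensor.
                        this.head.Height = Math.Min(this.head.MaxHeight, this.head.MaxHeight / joint.Position.Z);
                        this.head.Width = Math.Min(this.head.MaxWidth, this.head.MaxWidth / joint.Position.Z);
                        var point = this.getDisplayPosition(joint, (int)this.head.Width / 2, (int)this.head.Height / 2);
                        Canvas.SetLeft(this.head, point.X);
                        Canvas.SetTop(this.head, point.Y);
                    }
                }
            }
        }
    }
}
```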
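The sizing rule can be checked in isolation. The sketch below uses a hypothetical HelmetScaling helper that divides the maximum size by Z and caps the result at the maximum, which models how WPF lets MaxHeight and MaxWidth take precedence over Height and Width. It assumes Z is the distance in metres, the unit of the SDK's skeleton space.

```csharp
using System;

// Hypothetical helper mirroring the sizing in nui_SkeletonFrameReady.
public static class HelmetScaling
{
    // size = maxSize / z, capped at maxSize (as WPF's MaxHeight/MaxWidth would do).
    public static double ScaledSize(double maxSize, double z)
    {
        if (z <= 0)
        {
            throw new ArgumentOutOfRangeException(nameof(z), "Depth must be positive.");
        }
        return Math.Min(maxSize, maxSize / z);
    }
}
```

With a maximum of 150, a user 2 m from the sensor gets a 75-pixel helmet and a user 3 m away gets 50 pixels. Anyone closer than 1 m gets the full 150 pixels, which is why the XAML sets MaxHeight and MaxWidth rather than a fixed size.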

For an explanation of how the getDisplayPosition method works, see the previous post. The only change is that the method now accepts a width offset and a height offset, which are subtracted from the computed position. Passing half the helmet’s width and height keeps the helmet centred on the head as its size changes with the user’s distance from the Kinect sensor.

```csharp
private Point getDisplayPosition(Joint joint, int widthOffset, int heightOffset)
{
    int colourX, colourY;
    float depthX, depthY;

    // Project the joint from skeleton space into the depth image (normalised 0..1),
    // then scale to 320x240 depth pixels, clamped to the image bounds.
    this.runtime.SkeletonEngine.SkeletonToDepthImage(joint.Position, out depthX, out depthY);
    depthX = Math.Max(0, Math.Min(depthX * 320, 320));
    depthY = Math.Max(0, Math.Min(depthY * 240, 240));

    // Map the depth pixel to the corresponding pixel in the 640x480 colour image.
    ImageViewArea imageView = new ImageViewArea();
    this.runtime.NuiCamera.GetColorPixelCoordinatesFromDepthPixel(ImageResolution.Resolution640x480, imageView, (int)depthX, (int)depthY, 0, out colourX, out colourY);

    // Scale to the canvas size and shift by the supplied offsets.
    return new Point((int)(this.canvas.Width * colourX / 640) - widthOffset, (int)(this.canvas.Height * colourY / 480) - heightOffset);
}
```
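The arithmetic in getDisplayPosition that doesn't depend on the sensor can be pulled out and tested on its own. This is a minimal sketch with a hypothetical DisplayMapping class: ToDepthPixel repeats the clamping of a normalised depth-image coordinate, and ToCanvas repeats the scaling from the 640x480 colour image to the canvas, including the offset subtraction.

```csharp
using System;

// Hypothetical, sensor-free version of the arithmetic in getDisplayPosition.
public static class DisplayMapping
{
    // Scale a normalised (0..1) depth-image coordinate to pixels, clamped to [0, size].
    public static float ToDepthPixel(float normalised, int size) =>
        Math.Max(0, Math.Min(normalised * size, size));

    // Scale a 640x480 colour pixel onto the canvas, then subtract the offsets.
    // Passing half the image's width and height centres the image on the joint.
    public static (int X, int Y) ToCanvas(
        int colourX, int colourY,
        double canvasWidth, double canvasHeight,
        int widthOffset, int heightOffset)
    {
        int x = (int)(canvasWidth * colourX / 640) - widthOffset;
        int y = (int)(canvasHeight * colourY / 480) - heightOffset;
        return (x, y);
    }
}
```

For example, a head at the centre of the colour image (320, 240) on a 640x480 canvas, with a 150-pixel helmet, gives a top-left corner of (245, 165), so the helmet’s centre sits on the head.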

Although not shown here, I’ve also refactored the stream access code into a library called KinectManager, which uses data binding to update the UI.
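KinectManager itself isn’t shown, but WPF data binding relies on the INotifyPropertyChanged pattern. Here is a minimal sketch of that pattern for an Upgrade property, using a hypothetical UpgradeViewModel class. It also shows one likely cause of the compile error reported in the comments: a class deriving from a WPF DependencyObject (such as Window) inherits a protected OnPropertyChanged(DependencyPropertyChangedEventArgs), so OnPropertyChanged("Upgrade") only compiles if a string overload like the one below is declared.

```csharp
using System.ComponentModel;

// Hypothetical view model illustrating INotifyPropertyChanged; not the post's KinectManager.
public class UpgradeViewModel : INotifyPropertyChanged
{
    private bool upgrade;

    public event PropertyChangedEventHandler PropertyChanged;

    public bool Upgrade
    {
        get { return this.upgrade; }
        set
        {
            if (this.upgrade == value)
            {
                return; // No change, so bindings need no notification.
            }
            this.upgrade = value;
            this.OnPropertyChanged("Upgrade");
        }
    }

    // Raises PropertyChanged so bound UI elements re-read the named property.
    protected virtual void OnPropertyChanged(string propertyName)
    {
        PropertyChangedEventHandler handler = this.PropertyChanged;
        if (handler != null)
        {
            handler(this, new PropertyChangedEventArgs(propertyName));
        }
    }
}
```

Skipping the notification when the value hasn’t changed avoids needless UI updates, for example if the speech recogniser hears ‘upgrade’ twice.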

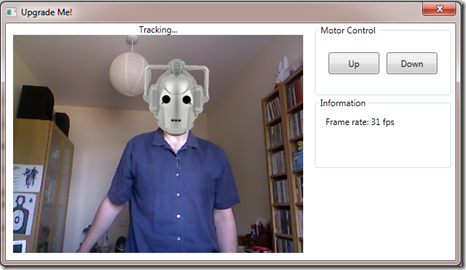

The application is shown below. When the user says ‘upgrade’, and provided that the skeleton tracking engine has identified a person, a cyber helmet is placed over the identified user’s head, with the helmet still covering the user’s head as they are tracked. Furthermore, the cyber helmet is resized to cover the user’s head based upon their distance from the Kinect sensor.

Conclusion

The Kinect for Windows SDK beta from Microsoft Research is a starter kit for application developers. It allows access to the Kinect sensor and experimentation with its features. The sensor provides skeleton tracking data that can be used to accurately overlay an image on a specific part of a person’s body in real time. Furthermore, depth data can be used to ensure that overlaid images are scaled correctly for the user’s distance from the sensor.

3 comments:

Hey, hi! Thank you for the great tutorial, but I get these errors when I try to run it:

Error 1 The best overloaded method match for 'System.Windows.DependencyObject.OnPropertyChanged(System.Windows.DependencyPropertyChangedEventArgs)' has some invalid arguments

Error 1 Argument 1: cannot convert from 'string' to 'System.Windows.DependencyPropertyChangedEventArgs'

Both errors are on the line

this.OnPropertyChanged("Upgrade");

Please help.

Hi,

Thanks for the praise. I suspect the problem is caused by how you've implemented your OnPropertyChanged method. You can find a zip of my source at:

https://skydrive.live.com/redir.aspx?cid=1b98b9a3cc9eb1aa&resid=1B98B9A3CC9EB1AA!153&authkey=KJVwhBsFrcM%24

This will show you how I implemented OnPropertyChanged.

Regards,

Dave

Ahh, great :) Thanks, I understand now.

I was trying to follow your "Gesture Recognition Pt 1" tutorial, but it is also giving me various errors. If you could upload the source for that too, it would be very helpful.

Thanks again,

Best regards,

Rajat
