In my previous entry, I described how I created a SQL Server 2008 database that contains spatial data for the BeanieTracker Web application. In this article, I'll examine the Web application itself and discuss how to combine spatial data from SQL Server with the Microsoft Virtual Earth map control. The code for this article is available here.

The aim of the Beanie Tracker application is to use Virtual Earth map control to visualize the spatial data in the database in two ways: - Use push-pins to show the locations that Beanie has visited, and include a photograph and the distance from home in the tooltip displayed for each push-pin.

- Overlay countries Beanie has visited with a colored shape - the more often Beanie has visited the country, the darker the color of the shape.

The first step is to display the Virtual Earth map control in the Web page. The map control is freely available, and provides a Javascript-based API that you can use from pretty much any Web application to add mapping capabilities to your Web site. To include the control in your Web page, you need to do four things:

In the body of the Web page, create a <div> element where you want the map to be displayed:

<div id='mapDiv' style="position:relative; width:600px; height:600px;"/>

In the head of the page, add a reference to the Virtual Earth map control script library:

<script type="text/javascript" src="http://dev.virtualearth.net/mapcontrol/mapcontrol.ashx?v=6">

</script>

In the head of the page, add a script to display the map in the <div> area you created in step 1:

<script type="text/javascript">

var map = null;

// Display the map control

function GetMap()

{

map = new VEMap('mapDiv');

map.LoadMap();

map.SetCenterAndZoom(new VELatLong(0, 0), 1);

}

</script>

In the <body> tag, assign your script to the onload event:

<body onload="GetMap();">

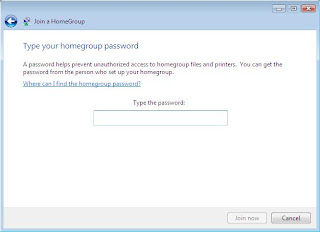

Using only this code, you can include a fully-functional Virtual Earth map control in your Web page. The control provides the ability for users to click and drag to move around the map, zoom by double-clicking or using a mouse scroll wheel, and change the view from a basic map, to arial view (based on satellite imagary) or even to birds-eye view (low level arial photography) where available. The map control is shown here:

On its own, the map control provides some interesting functionality, but for the Beanie Tracker application I want to use the control's API to visualize the data from the database. The key challenge here is to get the data from the database (which is on the server) to the Javascript used to render it on the map (which is in the browser). There are a number of ways to do this, including creating a Web Service or implementing a .aspx page that returns a text/xml document instead of HTML; but one of the most common is to implement a custom HTTP handler that returns the data as a GeoRSS feed, and consume that feed from the Javascript in the Web page. GeoRSS is an XML-based feed format for exchanging Geographic Markup Language (GML) elements across the Internet. Here's an example of a GeoRSS feed:

<?xml version="1.0" encoding="utf-8" ?>

<feed xmlns="http://www.w3.org/2005/Atom"

xmlns:georss="http://www.georss.org/georss"

xmlns:gml="http://www.opengis.net/gml">

<title>BeanieTracker</title>

<subtitle>Beanie Locations</subtitle>

<link href=http://www.graemesplace.com/beanietracker.htm/ />

<updated>10/11/2008 09:28:09</updated>

<author>

<name>Graeme Malcolm</name>

</author>

<entry>

<title>Beanie diving in the Red Sea</title>

<description><img src='Photo.aspx?PhotoID=1' height='100'/><p>4329.09 km from

home</p></description>

<georss:where>

<gml:Point xmlns="http://www.opengis.net/gml">

<gml:pos>27.8487 34.2865</gml:pos>

</gml:Point>

</georss:where>

</entry>

<entry>

<title>Beanie at Edinburgh Castle</title>

<description><img src='Photo.aspx?PhotoID=2' height='100'/><p>18.05 km from

home</p></description>

<georss:where>

<gml:Point xmlns="http://www.opengis.net/gml">

<gml:pos>55.9486 -3.2005</gml:pos>

</gml:Point>

</georss:where>

</entry>

</feed>

To get this feed to the browser, I created an HTTP handler that builds the XML for the feed, inserting the GML representations of the spatial data in the database; which are retrieved through some stored procedures in the database itself. Since there are two options that the user can use to retrieve a GeoRSS feed (one for photos of locations Beanie has visited, and one for a list of countries and a count of visits Beanie has made to them), I added some logic in the HTTP handler to look for a parameter, and create the appropriate feed with data from the corresponding stored procedure. The main sections of code (in VB .NET) from the handler are shown here:

Public Sub ProcessRequest(ByVal context As HttpContext) Implements

IHttpHandler.ProcessRequest

context.Response.ContentType = "text/xml"

Dim rssOutput As New System.Text.StringBuilder("<?xml version='1.0' encoding='utf-8'?>")

Try

'Build the GeoRSS feed

rssOutput.AppendLine("<feed xmlns='http://www.w3.org/2005/Atom'")

rssOutput.AppendLine("xmlns:georss='http://www.georss.org/georss'")

rssOutput.AppendLine("xmlns:gml='http://www.opengis.net/gml'>")

rssOutput.AppendLine("<title>BeanieTracker</title>")

rssOutput.AppendLine("<subtitle>Beanie Locations</subtitle>")

rssOutput.AppendLine("<link href='http://localhost/beanietracker/'/>")

rssOutput.AppendLine("<updated>" + System.DateTime.Now + "</updated>")

rssOutput.AppendLine("<author>")

rssOutput.AppendLine("<name>Graeme Malcolm</name>")

rssOutput.AppendLine("</author>")

Dim dataParam As String = context.Request.QueryString("data")

If Not dataParam Is Nothing Then

If dataParam = "locations" Then

GetPhotos(rssOutput)

ElseIf dataParam = "countries" Then

GetCountries(rssOutput)

Else

Throw New Exception("Invalid parameter")

End If

End If

'Close the <feed> document and send it as the response

rssOutput.Append("</feed>")

context.Response.Write(rssOutput.ToString())

Catch e As Exception

OutputError(e.ToString(), context)

End Try

End Sub

Private Sub GetPhotos(ByRef rssOutput As System.Text.StringBuilder)

Using sqlConn As New SqlConnection(connStr)

'Open a connection to the database

sqlConn.Open()

Dim spName As String

'Use the GetPhotosGML stored proc to get all stores by default

spName = "GetPhotosGML"

Using cmd As New SqlCommand()

cmd.Connection = sqlConn

cmd.CommandType = Data.CommandType.StoredProcedure

'Specify the stored procedure name as the command text

cmd.CommandText = spName

Using geomRdr As SqlDataReader = cmd.ExecuteReader()

'Read the DataReader to process each row

While (geomRdr.Read())

'Create an <entry> element for this row

rssOutput.AppendLine("<entry>")

rssOutput.AppendLine(String.Format("<title>{0}</title>", geomRdr.GetValue(1)))

rssOutput.AppendLine(String.Format("<description><img

src='Photo.aspx?PhotoID={0}' height='100'/><p>{1} km from

home</p></description>", _

geomRdr.GetValue(0), geomRdr.GetValue(3)))

'Add a <georss:where> element

rssOutput.AppendLine("<georss:where>")

Dim gml As String

'Get the geography instance GML from column 2

gml = geomRdr.GetValue(2).ToString()

'Append the gml: prefix to all the elements due to VE parsing behavior

gml = gml.Replace("<", "<gml:")

gml = gml.Replace("gml:/", "/gml:")

'Add the <gml:> elements to the output XML

rssOutput.AppendLine(gml)

'Close <georss:where> and <entry> elements

rssOutput.AppendLine("</georss:where>")

rssOutput.AppendLine("</entry>")

End While

End Using

End Using

End Using

End Sub

Private Sub GetCountries(ByRef rssOutput As System.Text.StringBuilder)

Using sqlConn As New SqlConnection(connStr)

'Open a connection to the database

sqlConn.Open()

Dim spName As String

'Use the GetPhotosGML stored proc to get all stores by default

spName = "GetCountriesGML"

Using cmd As New SqlCommand()

cmd.Connection = sqlConn

cmd.CommandType = Data.CommandType.StoredProcedure

'Specify the stored procedure name as the command text

cmd.CommandText = spName

Using geomRdr As SqlDataReader = cmd.ExecuteReader()

'Read the DataReader to process each row

While (geomRdr.Read())

'Create an <entry> element for this row

rssOutput.AppendLine("<entry>")

'Use columns 0 and 1 for the title and description

rssOutput.AppendLine(String.Format("<title>{0}</title>", geomRdr.GetValue(1)))

rssOutput.AppendLine(String.Format("<description>{0}</description>", _

geomRdr.GetValue(3)))

'Add a <georss:where> element

rssOutput.AppendLine("<georss:where>")

Dim gml As String

'Get the geography instance GML from column 2

gml = geomRdr.GetValue(2).ToString()

'Append the gml: prefix to all the elements due to VE parsing behavior

gml = gml.Replace("<", "<gml:")

gml = gml.Replace("gml:/", "/gml:")

'Add the <gml:> elements to the output XML

rssOutput.AppendLine(gml)

'Close <georss:where> and <entry> elements

rssOutput.AppendLine("</georss:where>")

rssOutput.AppendLine("</entry>")

End While

End Using

End Using

End Using

End Sub

The code merges the GML results from the stored procedures into an XML feed, which is built up using simple string concatenation. You could use an XmlDocument or XmlWriter for this (or you could even use SQL Server's native XML support to generate the complete XML in the stored procedures), but this approach makes it easier to see what's going on. Note that SQL Server includes a gml:http://www.opengis.net/gml namespace declaration in the query results. However, the Virtual Earth map contol appears to require that all namespaces be declared in the root element of the feed, so I had to add code to strip out the namespace in the query results.

Next I needed to register the HTTP handler so that any requests for URLs with the extension .georss are handled by the custom handler, and not treated as regular ASP.NET pages. There's nothing special about the extension .georss by the way, I just chose to use that for my handler. To register a custom handler, you need to edit the Web.Config of your ASP.NET application. The specific entry you need to create depends on whether you are using IIS 6 or IIS 7 - see this KB article for more information. The configuration I used is shown here:

<httpHandlers>

<!--Register the GeoRSSHandler for .georss requests -->

<add verb="*" path="*.georss" type="GeoRSSHandler" validate="false"/>

</httpHandlers>

Finally, I needed to create some client-side Javascript to retrieve the feed and import it into the Virtual Earth map control. Here are the functions to do this (one to retrieve the photo locations feed, and one to retrieve the countries feed):

function ShowBeanieLocations()

{

try

{

map.DeleteAllShapes();

// Import GeoRSS feed of location data, and call onBeanieLocationsLoad function

when the data is loaded

var veLayerSpec = new VEShapeSourceSpecification(VEDataType.GeoRSS,"./Beanie.georss?data=locations");

map.ImportShapeLayerData(veLayerSpec, onBeanieLocationsLoad, true);

}

catch(e)

{

document.getElementById("Info").innerHTML = e.Message;

}

}

function ShowCountries()

{

try

{

map.DeleteAllShapes();

// Import GeoRSS feed of country data, and call onCountriesLoad function when the data is loaded

var veLayerSpec = new VEShapeSourceSpecification(VEDataType.GeoRSS,"./Beanie.georss?data=countries");

map.ImportShapeLayerData(veLayerSpec, onCountriesLoad, true);

}

catch (e)

{

document.getElementById("Info").innerHTML = e.Message;

}

}

Both of these functions follow the same basic procedure:

- Clear all existing shapes from the map control

- Retrieve the feed by requesting a .georss file wth the appropriate parameter (note that the actual file name is unimportant, the custom handler handles all .georss requests)

- Import the feed into the map control as a shape layer by using the ImportShapeLayerData method

The ImportShapeLayerData method is asynchronous, so a callback function to be run when the impoprt has completed is specified. Here are the callback functions in the Beanie Tracker application:

function onBeanieLocationsLoad(feed)

{

// Count the shapes returned

var locationcount = feed.GetShapeCount();

document.getElementById("Info").innerHTML = 'Beanie has visited ' +

locationcount + ' places.';

}

function onCountriesLoad(feed)

{

/ / Count the shapes returned

var locationcount = feed.GetShapeCount();

for (i=0;i<locationcount;i++)

{

var s = feed.GetShapeByIndex(i);

s.SetLineColor(new VEColor(0, 0, 0, 0));

var numVisits = s.GetDescription();

s.SetDescription("Beanie has visited this country " + numVisits + " times.");

var transparency = (numVisits % 9) / 10;

s.SetFillColor(new VEColor(255, 50, 100, transparency));

s.SetCustomIcon("<img src='images/transparentIcon.png'>" + numVisits +

"</img>");

}

document.getElementById("Info").innerHTML = 'The darker the country, the more times Beanie has visited it.';

}

The callback function for the photo location data feed just counts the number of shapes (which will be rendered as push pins on the map by default since they are all points defined by a single lat/long pair) and displays the total. The callback for the countries data is a little more complicated - The elements returned are polygons, and so will be rendered as shapes of countries on the map; the code changes the transparency property of each shape to reflect the number of visits Beanie has made to the country, so that the more visits Beanie has made, the darker the country will appear. Note that by default a pushpin is displayed in the center of the shape, but I've used the SetCustomIcon method to specify that a transparent image should be used instead and included the number of visits as text, so that's what appears on the map.

The last step is to hook the methods that retrieve the feeds up to the buttons on the Web page:

<input id="Button1" type="button" value="Show Beanie Locations"

onclick="javascript:ShowBeanieLocations()" />

<input id="Button2" type="button" value="Count Locations by Country:"

onclick="javascript:ShowCountries()"/>

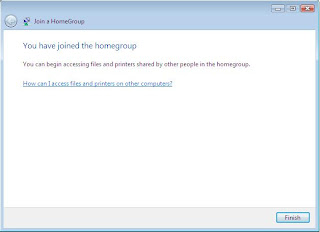

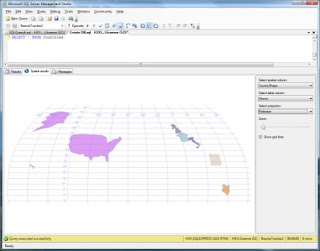

The resulting page is shown here:

One minor issue I had to deal with is that the ImportShapeLayerData method of the Virtual Earth map control does not seem to handle MultiSurface GML elements in the GeoRSS feed. I had to change the spatial data for countries with multiple land masses to a single polygon in the database by adding a very thin line between each land mass. If anyone knows of a workaround for this, I'd love to hear about it!

Finally, click the Transform All Templates button.

Finally, click the Transform All Templates button.