(cross-posted from The Blog @ Graemesplace)

In a previous post, I described how you can use Data Quality Services (DQS) to create a knowledge base for the domains (data columns) used in your business data and use it to cleanse data by correcting invalid or inconsistent values. However, data cleansing is only one side of the coin when it comes to DQS. You can also use DQS to perform data matching: in other words, finding records that are potential duplicates of one another and consolidating them to a single surviving record.

When you think about it, the potential for duplicate data entry in most complex business environments is enormous. For example, let’s imagine an e-commerce site where customers need to register before placing orders. It’s perfectly conceivable that a customer who only uses the site occasionally might re-register with slightly different details because they’ve forgotten that they had registered previously or can’t remember their login credentials. Even if the site applies a policy that demands a unique email address for each registration, there’s nothing to stop the same customer registering multiple times with different email addresses. For an individual sales order, the fact that the customer is registered multiple times is inconsequential; as long as the payment and delivery address details are correct, the order can be processed successfully. However, when the company wants to use its data to perform any kind of business intelligence (BI) reporting or analysis that aggregates information per customer, the duplicate entries can lead to misleading results.

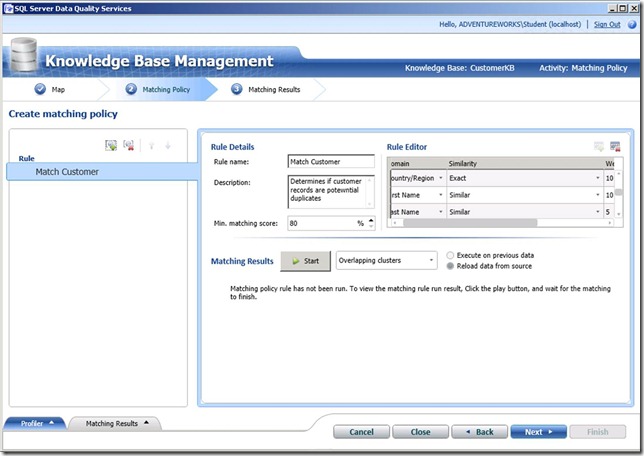

To use DQS to match data, you must first add a matching policy to a knowledge base. You can use an existing knowledge base that is also used for data cleansing, or you can create a knowledge base specifically for data matching. In this example, I’m opening an existing knowledge base that contains domains for customer records for the Matching Policy activity.

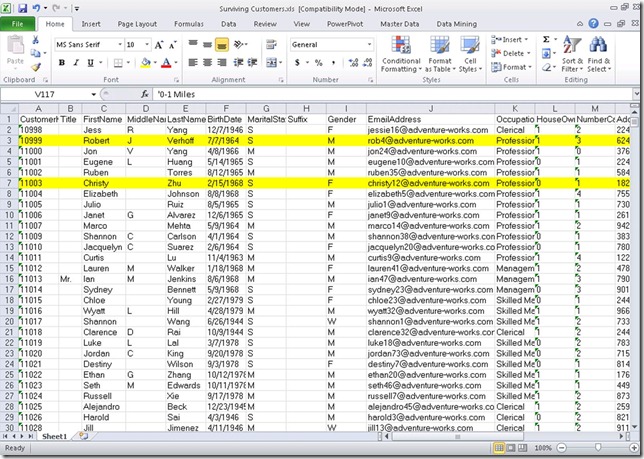

Just as when performing knowledge discovery, I need to map some sample data to the domains defined in the knowledge base. This enables me to test the matching policy against a known data set as I build it, and therefore verify that it successfully identifies known duplicate records. In this case, I’m using data in an Excel workbook as the source for my sample data, but you can also use a table in a SQL Server database.

Having mapped sample data to the domains, I can now define the matching rules for my matching policy. You can include multiple rules, and each one uses a set of weighted comparisons of domain values to identify clusters of records that are potential duplicates of one another.

Potential matches are determined based on a score that is calculated from the weighted comparisons you define in the rule. Here are the comparisons I’ve used in my Match Customer rule:

| Domain | Similarity | Weight | Prerequisite |
| --- | --- | --- | --- |
| Birth Date | Exact | | X |
| Email Address | Exact | 20 | |
| Postal Code | Exact | 10 | |
| Country/Region | Exact | 10 | |
| First Name | Similar | 10 | |
| Last Name | Similar | 10 | |
| Street Address | Similar | 20 | |
| City | Similar | 10 | |
| State | Similar | 10 | |

Note that an exact match of the Birth Date domain is specified as a prerequisite. In other words, only records where the birth date is an exact match will be considered as candidates for a potential duplicate. Prerequisite domains in a matching rule must use the Exact similarity and have no weighting value. All of the other domains are compared using an exact or similar match, and have weightings that add up to a total of 100.

Assuming the birth date for the records being compared is a match, DQS then makes the other comparisons defined in the matching rule and adds the specified weighting value for each comparison that is true to produce an overall score. For example, consider two records with identical Birth Date values being compared using the Match Customer rule defined above. If the Email Address domains for both records are an exact match, 20 is added to the score. If the First Name domains are similar (for example, “Rob” and “Robert”), another 10 is added to the score, and so on until all of the comparisons in the rule have been made. The resulting score is then compared to the minimum matching score defined for the matching rule (in this case 80). If the score meets or exceeds the minimum matching score, then the records are considered a match. Multiple records that are considered matches for one another are grouped into a cluster.
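To make the arithmetic concrete, here is a rough Python sketch of the scoring described above. It is not how DQS is implemented internally: the `is_similar` helper (built on `difflib`) is a hypothetical stand-in for whatever similarity test DQS applies, the 0.6 threshold is arbitrary, the sample records are made up, and treating a score equal to the minimum as a match is an assumption. Only the domain names, weights, prerequisite, and minimum score of 80 come from the Match Customer rule.

```python
from difflib import SequenceMatcher

# Taken from the Match Customer rule: Birth Date is the prerequisite,
# and the remaining weights add up to 100.
PREREQUISITES = ["Birth Date"]
COMPARISONS = {
    "Email Address": ("exact", 20),
    "Postal Code": ("exact", 10),
    "Country/Region": ("exact", 10),
    "First Name": ("similar", 10),
    "Last Name": ("similar", 10),
    "Street Address": ("similar", 20),
    "City": ("similar", 10),
    "State": ("similar", 10),
}
MIN_MATCHING_SCORE = 80


def is_similar(a, b, threshold=0.6):
    """Hypothetical similarity test; NOT the algorithm DQS actually uses."""
    a, b = a.strip().lower(), b.strip().lower()
    return SequenceMatcher(None, a, b).ratio() >= threshold


def match_score(rec1, rec2):
    """Sum the weights of the comparisons that hold.

    Returns None when a prerequisite domain is not an exact match,
    because such pairs are never considered as candidates.
    """
    if any(rec1[d] != rec2[d] for d in PREREQUISITES):
        return None
    score = 0
    for domain, (kind, weight) in COMPARISONS.items():
        if kind == "exact":
            hit = rec1[domain] == rec2[domain]
        else:
            hit = is_similar(rec1[domain], rec2[domain])
        if hit:
            score += weight
    return score


def is_match(rec1, rec2):
    """Assumption: a score equal to the minimum counts as a match."""
    score = match_score(rec1, rec2)
    return score is not None and score >= MIN_MATCHING_SCORE


# Made-up sample records, for illustration only.
daniel = {
    "Birth Date": "1975-03-14",
    "Email Address": "daniel.garcia@example.com",
    "Postal Code": "98052",
    "Country/Region": "United States",
    "First Name": "Daniel",
    "Last Name": "Garcia",
    "Street Address": "123 Main Street",
    "City": "Redmond",
    "State": "WA",
}
dan = {**daniel, "First Name": "Dan", "Postal Code": "98052-1234"}

print(match_score(daniel, dan), is_match(daniel, dan))
```

In this sketch the two records differ only in First Name (still similar) and Postal Code (no longer an exact match), so the only weight lost is the 10 for Postal Code.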

After you have defined the matching rules, you can use them to find matches in the sample data you mapped earlier. This gives you the opportunity to verify that the rules behave as expected against a known dataset. In this case, the dataset results in a single cluster of matches that includes two records – one for Daniel Garcia and another for Dan Garcia.

Now that I’ve defined my matching policy, I can publish the knowledge base and allow the data stewards in my organization to use it for data matching.

To use a knowledge base to perform data matching, create a new data quality project, specify the knowledge base, and specify the Matching activity as shown here.

The first step, as it is in any DQS project, is to map the fields in your data source to the domains in the knowledge base. Just as before, the data source can be a table in a SQL Server database or an Excel file. This time, I’m using the Customers table in the Staging SQL Server database.

After you’ve mapped the domains, you can start the matching process. When the process is complete, the clusters of matched records are displayed. In this case, there are two clusters, each containing two matches. At this stage, you can choose to reject any matches that you know aren’t duplicates.

When the matches have all been identified, you can export the results to a SQL Server table or an Excel file. You can also export survivors (one record from each cluster that is chosen as the correct one) based on one of the following survivorship rules:

- Pivot record – A record in the cluster that is chosen arbitrarily by DQS.

- Most complete and longest record – The record that has the fewest null field values and the longest overall data length.

- Most complete record – The record that has the fewest null fields.

- Longest record – The record that has the longest overall data length.
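The survivorship rules above can be approximated in a few lines of Python. This is only a sketch of the idea, not the DQS implementation: the rule names are hypothetical identifiers, the pivot is simply taken to be the first record in the cluster, empty strings are counted as nulls, and for the combined rule I assume completeness is compared first with length as the tie-breaker. The sample cluster, including the phone number, is made up.

```python
def null_count(record):
    """Number of fields with no value (None or empty string)."""
    return sum(1 for v in record.values() if v is None or v == "")


def data_length(record):
    """Total length of all non-null field values."""
    return sum(len(str(v)) for v in record.values() if v is not None)


def choose_survivor(cluster, rule):
    """Pick one record from a cluster according to a survivorship rule."""
    if rule == "pivot":
        # Assumption: treat the first record as the pivot.
        return cluster[0]
    if rule == "most_complete":
        return min(cluster, key=null_count)
    if rule == "longest":
        return max(cluster, key=data_length)
    if rule == "most_complete_and_longest":
        # Assumption: fewest nulls first, then longest data as tie-breaker.
        return min(cluster, key=lambda r: (null_count(r), -data_length(r)))
    raise ValueError(f"Unknown survivorship rule: {rule}")


# Made-up cluster of two matched records, for illustration only.
cluster = [
    {"First Name": "Dan", "Last Name": "Garcia", "Phone": None},
    {"First Name": "Daniel", "Last Name": "Garcia", "Phone": "555-0100"},
]

for rule in ("pivot", "most_complete", "longest", "most_complete_and_longest"):
    print(rule, "->", choose_survivor(cluster, rule)["First Name"])
```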

The exported results include all of the source data, plus additional columns that identify the cluster each matching record belongs to, the matching rule used, the score calculated for each match, and the pivot record for each cluster.

The exported survivors contain all of the non-matching records from the original data source and one version of each matched record based on the survivorship rule you selected. In the following example, I’ve highlighted the surviving records from my matching process.

In some cases, you can simply replace the original data set with the survivor records to create a de-duplicated set of records. However, in most business scenarios you’ll need to apply some logic (manual or automated) to handle relationships between duplicate records and other tables. For example, before I eliminate the duplicate customer records identified by the matching process in the above example, I would need to reassign any sales orders that are currently related to those customer records to the surviving records.
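As a rough illustration of that reassignment step, the sketch below repoints sales orders from duplicate customers to the survivor and then deletes the duplicates in a single transaction. It uses an in-memory SQLite database purely to stay self-contained; the `Customers` and `SalesOrders` tables, their columns, the `merge_duplicates` helper, and the sample rows are all hypothetical. In a real SQL Server solution you would adapt the same two statements to your own schema.

```python
import sqlite3


def merge_duplicates(conn, survivor_of):
    """Repoint orders to surviving customers, then remove the duplicates.

    survivor_of maps each duplicate customer ID to its surviving customer ID.
    """
    with conn:  # commit on success, roll back if anything fails
        for duplicate_id, survivor_id in survivor_of.items():
            conn.execute(
                "UPDATE SalesOrders SET CustomerID = ? WHERE CustomerID = ?",
                (survivor_id, duplicate_id),
            )
            conn.execute(
                "DELETE FROM Customers WHERE CustomerID = ?",
                (duplicate_id,),
            )


# Hypothetical schema and made-up rows, for illustration only.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE Customers (CustomerID INTEGER PRIMARY KEY, Name TEXT);
    CREATE TABLE SalesOrders (
        OrderID INTEGER PRIMARY KEY,
        CustomerID INTEGER REFERENCES Customers(CustomerID)
    );
    INSERT INTO Customers VALUES (1, 'Daniel Garcia'), (2, 'Dan Garcia'), (3, 'Ana Silva');
    INSERT INTO SalesOrders VALUES (100, 1), (101, 2), (102, 3);
    """
)

# Customer 2 was identified as a duplicate of surviving customer 1.
merge_duplicates(conn, {2: 1})

orders = conn.execute(
    "SELECT OrderID, CustomerID FROM SalesOrders ORDER BY OrderID"
).fetchall()
customers = conn.execute(
    "SELECT CustomerID FROM Customers ORDER BY CustomerID"
).fetchall()
print(orders)
print(customers)
```

Updating the orders before deleting the duplicate customer matters: it keeps every order attached to a valid customer at each step, which is also what a foreign key constraint would require.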

Hopefully you’ve found this brief introduction to data matching with DQS useful. To learn more about DQS and its use in a data warehousing solution, you can attend Microsoft Official Curriculum (MOC) course 10777A: Implementing a Data Warehouse with SQL Server 2012.
