(cross-posted from The Blog at Graemesplace)

It’s typical of my job that, only a few short weeks after the publication of some Microsoft Official Curriculum courses that I spent months working on, Microsoft should choose to release a preview of the next version of the software on which they are based! As you may know, we recently published courses 10778A and 40009A, both of which make use of the PowerPivot and Power View features in Excel and SharePoint 2010, so it was with a certain amount of trepidation that I installed the preview of Office 2013 to get a first look at the enhancements that have been made.

The first, and most obvious, change is that the PowerPivot add-in for Excel no longer needs to be installed from a separate package. It’s built into Excel and only needs to be enabled, which you do by configuring the COM Add-ins in Excel’s options as shown here.

Note that there’s also a Power View add-in – more about that later!

After the PowerPivot add-in has been enabled, users will see the POWERPIVOT tab on the ribbon, as shown here.

With this ribbon, you can not only manage a PowerPivot tabular data model for the workbook as you can in Excel 2010, but you can also create calculated fields and KPIs without having to directly edit the model – making the process a little bit more intuitive for information workers.

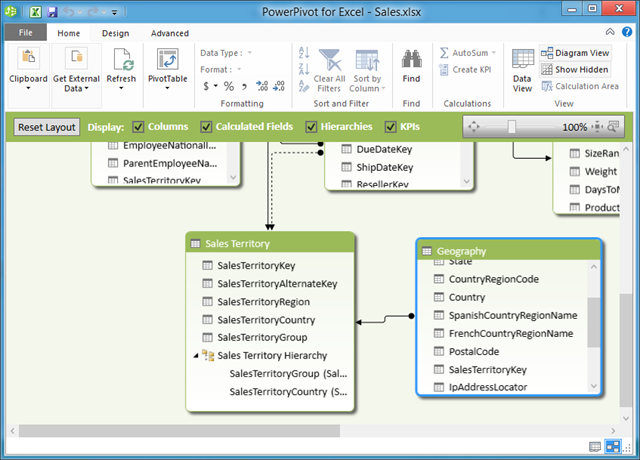

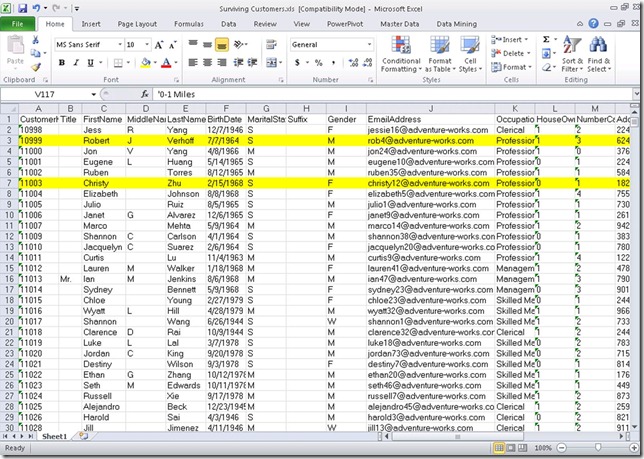

Clicking Manage opens the PowerPivot window, which is similar to that of the previous release. There are a few enhancements of course, but anyone who knows PowerPivot in Excel 2010 will find themselves in familiar territory. In this case, I’ve opened a PowerPivot workbook I created with Excel 2010 based on data in the AdventureWorksDW SQL Server sample database. Because of the changes in this release, I was prompted to allow Excel to update the data model and re-save the workbook. So one thing to be aware of is that you can open (and update) Excel 2010 PowerPivot workbooks in Excel 2013, but after they’ve been updated you won’t be able to open them in Excel 2010. You can see the diagram view of my PowerPivot data model below. Note that it includes a hierarchy in the Sales Territory table.

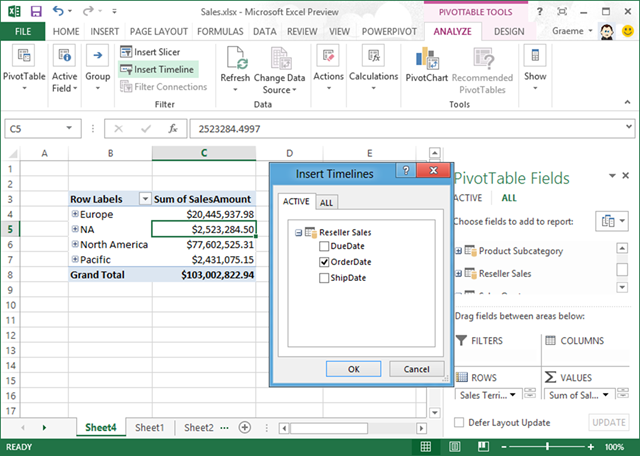

After you’ve created the data model in your workbook, you can use it as a source for PivotTables, just as you could in Excel 2010. There are, however, one or two nice enhancements on a new ANALYZE tab of the ribbon that make it easier to do things like create slicers. Another new feature is the ability to create timeline filters, which make it easier to analyse data based on chronological periods. To add a timeline, just click Insert Timeline and select any of the time-based attributes that Excel identifies as having a suitable relationship in the model.

After you’ve inserted a timeline, you can use it to filter the data in the PivotTable as shown here.

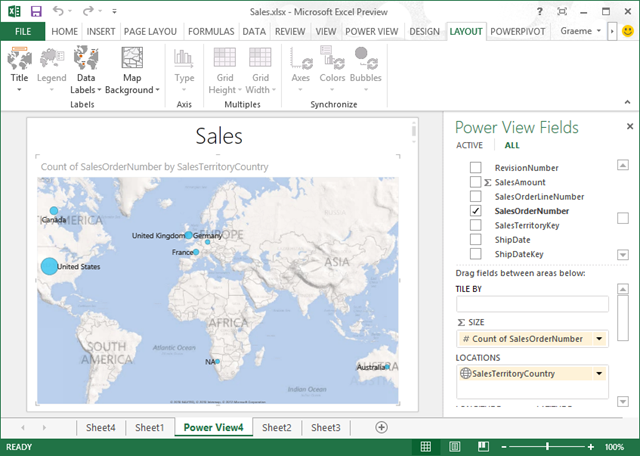
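
If it helps to think about what a timeline does in code terms, here is a rough sketch in Python. It is only an analogy for the idea of "filter by a date range, then aggregate", not anything that Excel or PowerPivot actually runs. The sample rows, the `SALES` list and the `pivot_by_territory` function are all made up for illustration and are not taken from AdventureWorksDW.

```python
from collections import defaultdict
from datetime import date

# Hypothetical sales rows: (territory, order date, amount).
# Illustrative values only; not real AdventureWorksDW data.
SALES = [
    ("Europe", date(2007, 1, 15), 100.0),
    ("Europe", date(2007, 6, 3), 250.0),
    ("Pacific", date(2007, 2, 20), 80.0),
    ("Pacific", date(2008, 3, 9), 120.0),
]


def pivot_by_territory(rows, start, end):
    """Sum amounts per territory for rows dated within [start, end] inclusive."""
    totals = defaultdict(float)
    for territory, order_date, amount in rows:
        # The "timeline" is just a date-range test applied before aggregating.
        if start <= order_date <= end:
            totals[territory] += amount
    return dict(totals)


# Selecting Q1 2007 on the timeline corresponds to this date range.
q1_2007 = pivot_by_territory(SALES, date(2007, 1, 1), date(2007, 3, 31))

# Widening the selection to the whole of 2007 changes the totals.
year_2007 = pivot_by_territory(SALES, date(2007, 1, 1), date(2007, 12, 31))
```

Dragging the timeline selection wider or narrower is, conceptually, the same as calling the function again with a different `start` and `end`. The PivotTable layout stays the same and only the rows feeding it change.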

Earlier, I mentioned that Excel 2013 includes a Power View add-in. This enables information workers to create Power View reports from the data model in the workbook (and external data sources). Previously, Power View was only available in SharePoint Server 2010, but in Office 2013 you can use it right there in an Excel workbook.

To create a Power View report from the data model in the workbook, just click Power View on the INSERT tab of the ribbon.

If necessary, you’ll be prompted to install Silverlight (which is required by the Power View add-in), and after doing so you’ll be able to create a Power View report from the data in your PowerPivot data model as shown here.

Note that you can include hierarchies in a Power View report, which wasn’t supported in the previous release. There are several other enhancements in this release, including support for new data visualizations (such as pie charts), and even visualization of geographical data on a Bing Maps map, as shown here.

This short article highlights just a few of the improvements to PowerPivot and Power View in Excel 2013. There are many more new features in Excel, as well as a greater ability to share BI capabilities across the enterprise through enhancements in SharePoint 2013 and SQL Server 2012 SP1, which I look forward to exploring in more depth.