Some previous posts in this blog have discussed new features in the SQL Server “Denali” database engine. For this post, however, I want to focus on some of the key enhancements in SQL Server “Denali” Integration Services (SSIS). SSIS first appeared in SQL Server 2005 as an evolution of the Data Transformation Services (DTS) component in previous releases, and has steadily become a core element of Extract, Transform, and Load (ETL) operations for many data warehousing implementations.

The big news in the “Denali” release of SQL Server Integration Services is a whole new deployment model for SSIS solutions. In previous releases, the only available unit of deployment was the package (a .dtsx file), which could be deployed either to the file system or to the MSDB database in a SQL Server instance. This single-package deployment model is at odds with the development model for SSIS solutions, in which a developer can create a single project that contains multiple packages. Prior to “Denali”, each package had to be deployed individually, and any variables that needed to be set at runtime had to be managed through a package configuration for each individual package. SSIS in “Denali” still supports this “legacy” deployment model, but now also supports project-level deployment to the new Integration Services catalog, and project-level parameters that can be used to set variables across multiple packages within a project.

The first thing you need to do to take advantage of this new deployment model is to create an Integration Services catalog on an instance of SQL Server. The Integration Services catalog is a central database in which SSIS projects can be stored and managed, and you can have one catalog per SQL Server instance. The Integration Services catalog uses SQLCLR (the .NET common language runtime hosted within SQL Server), so you need to enable this first by using the following Transact-SQL:

sp_configure 'show advanced options', 1;

GO

RECONFIGURE;

GO

sp_configure 'clr enabled', 1;

GO

RECONFIGURE;

GO
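
If you want to confirm that the setting took effect before creating the catalog, one option is to query the sys.configurations catalog view. This is just a quick sanity check, not a required step:

```sql
-- Check whether CLR integration is enabled on this instance.
-- value is the configured setting; value_in_use is the setting
-- currently in effect (1 = enabled after RECONFIGURE).
SELECT name, value, value_in_use
FROM sys.configurations
WHERE name = N'clr enabled';
```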

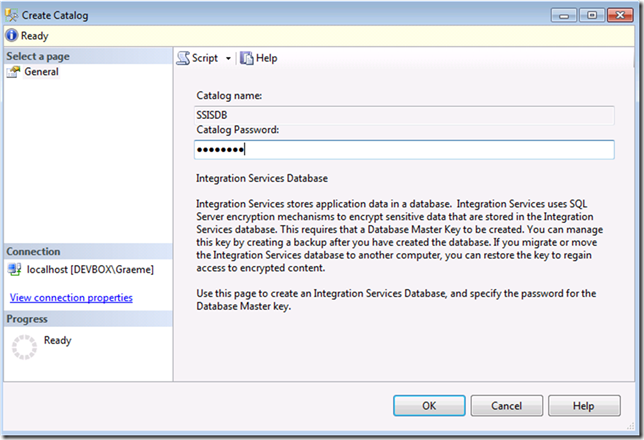

Now you’re ready to create an Integration Services catalog, which you can do in SQL Server Management Studio as shown here.

When you create the Integration Services catalog, you’re prompted for a password that is used to protect the database master key used to encrypt the data.

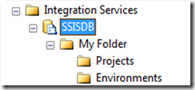

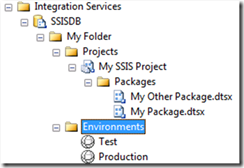

After clicking OK, refreshing the Object Explorer view reveals two new items as shown here. The first is a database named SSISDB, and the second is an SSISDB node beneath the Integration Services folder. The database is a regular SQL Server database that contains a number of tables, views, and stored procedures that you can use to manage and run SSIS projects and packages stored in the catalog. It is also where the projects and packages in your catalog are physically stored. The SSISDB node under the Integration Services folder provides a management interface for the catalog and enables you to define a logical folder structure for your catalog.
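
To get a feel for the management objects in the SSISDB database, you can list them with an ordinary metadata query. The sketch below assumes the public objects live in a schema named catalog, as they do in the released product (SQL Server 2012); a pre-release build may organize them differently.

```sql
USE SSISDB;
GO

-- List the views and stored procedures in the catalog schema
-- (assumption: the schema is named "catalog", as in the released product).
SELECT o.name, o.type_desc
FROM sys.objects AS o
JOIN sys.schemas AS s
    ON s.schema_id = o.schema_id
WHERE s.name = N'catalog'
  AND o.type IN ('V', 'P', 'PC')   -- views, T-SQL procedures, CLR procedures
ORDER BY o.type_desc, o.name;
```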

To create a folder in your catalog, simply right-click the SSISDB node under the Integration Services folder, and click Create Folder. Here I’ve created a folder with the imaginative name My Folder. Note that subfolders named Projects and Environments have automatically been created – we’ll return to these later.
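
If you would rather script the folder than click through Object Explorer, the catalog exposes a stored procedure for it. This is a minimal sketch that assumes the catalog.create_folder procedure and catalog.folders view as documented for the released product (SQL Server 2012); CTP builds may differ.

```sql
DECLARE @folder_id bigint;

-- Create a folder named My Folder in the catalog.
EXEC SSISDB.catalog.create_folder
     @folder_name = N'My Folder',
     @folder_id   = @folder_id OUTPUT;

-- Confirm that the folder now exists.
SELECT folder_id, name, created_time
FROM SSISDB.catalog.folders
WHERE name = N'My Folder';
```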

OK, so now we have an Integration Services catalog that contains a folder to which we can deploy an SSIS project; so I guess it’s time we went ahead and created a project to deploy. For our purposes, we’ll create a simple SSIS project that includes a data flow task that extracts the list of database names from the sysdatabases system view in the master database and copies it to a table in another database. I’m going to copy the database list to a table in a database called CmSampleDB, and to make matters a little more interesting, I’m going to create two tables that can act as the destination for the list – one to be used when testing the SSIS solution, and another to be used in production. We’ll design the SSIS project to support a project-level parameter so you can specify which table to use at runtime. Here’s my Transact-SQL code to create the destination tables:

USE CmSampleDB

GO

CREATE TABLE TestDBList

(name nvarchar(250))

GO

CREATE TABLE DBList

(name nvarchar(250))

GO

Now we can go ahead and create the SSIS project using SQL Server Business Intelligence Development Studio (BIDS). Creating an SSIS project in “Denali” is exactly the same as in previous versions; just select the Integration Services Project template as shown here:

When the new project is created, it contains a single package named Package.dtsx, which you can rename to suit your own requirements – I’m going

So far, nothing is very different from how you would create an SSIS project in previous releases of SQL Server, but here’s where we’re going to use a new feature – Project Parameters. Project parameters are, as the name suggests, parameters that can be used to pass variable values to the project at runtime. Because these parameters are scoped at the project level, they can be used by any package in the project. To add a project parameter, right-click the project in Solution Explorer and click Project Parameters, or click Project Parameters on the Project menu. Either of these actions displays the Parameters pane as shown here:

As you can see, I’ve used this pane to create a project-level parameter named TableName with a default value of TestDBList. This default value is more correctly known as the Design default value, since it’s used when I run the project within BIDS. When I deploy the project, I can set a Server default value that will override this one when packages in this project are run on the server.

Now I need to create the data flow task that copies the database names from sysdatabases in the master database to the table indicated by the TableName parameter in the CmSampleDB database. To do this I just need to drag a Data Flow task to the design surface of My Package.dtsx as shown here:

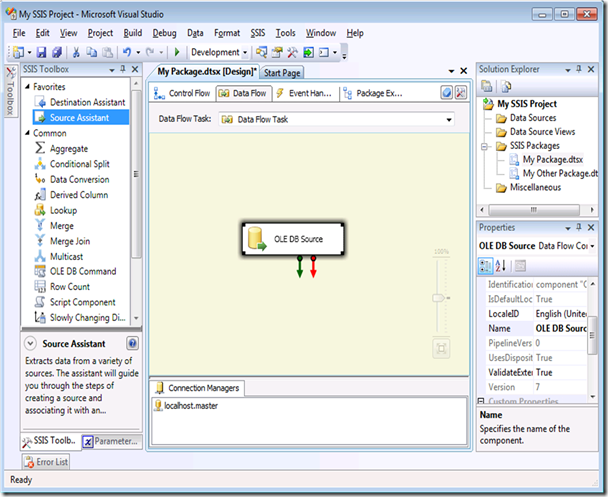

Next, I’ll double-click the data flow task to view the data flow design surface, and use the Source Assistant item on the SSIS Toolbox to create a new connection to the master database on my SQL Server instance. Then I can configure the OLE DB source that gets created to extract the name column from the sysdatabases system view by using the following SQL command:

SELECT name FROM sysdatabases
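
As a side note, sysdatabases is a backward-compatibility view. If you prefer the current catalog views, a query along these lines should return the same list of names; treat it as an optional alternative rather than what the package in this walkthrough uses.

```sql
-- Alternative source query using the sys.databases catalog view
-- instead of the sysdatabases compatibility view.
SELECT name
FROM sys.databases;
```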

The data flow surface now looks like this:

Next I’ll use the Destination Assistant to add a connection to the CmSampleDB database on my SQL Server instance, and connect the output from the source to the destination as shown here:

To complete the data flow, I need to configure the destination to insert the output from the source into the table specified in the project-level TableName parameter, as shown here:

Now I’m ready to build and deploy the project to the Integration Services catalog I created earlier. Building the project in BIDS creates a .ispac file, which you can then import into the catalog using SQL Server Management Studio, or deploy directly to the catalog from BIDS by clicking Deploy on the Project menu (or by right-clicking the project in Solution Explorer and clicking Deploy). Whichever approach you use, deployment to the catalog is accomplished via the Integration Services Deployment Wizard. After the Welcome screen, the wizard prompts you to select the project you want to deploy – in this case, the .ispac file I just built.

Next, the wizard loads and validates the project before prompting you for the destination. This consists of the server where the Integration Services catalog is hosted, and the path to the folder where you want to deploy the project. Here, I’ve selected the My Folder folder I created earlier.

Finally, the wizard prompts you to set Server default values for any project parameters. You can use the Design default value, specify a new value, or use an environment variable. We’ll look at environment variables shortly, but for now I’ve set the Server default value for the TableName parameter to DBList.

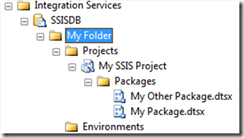

Completing the wizard deploys the project to the catalog, which you can verify in SQL Server Management Studio. Note that the project is actually saved to the Projects sub-folder of the path specified in the wizard, and that all packages within the project are deployed as a single unit.
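
The deployment can also be checked from a query window. The following sketch assumes the catalog.folders, catalog.projects, catalog.packages, and catalog.object_parameters views as documented for the released product (SQL Server 2012); names in a CTP build may not match exactly.

```sql
-- List every package deployed to My Folder, along with its parent project.
SELECT f.name  AS folder_name,
       p.name  AS project_name,
       pk.name AS package_name,
       p.last_deployed_time
FROM SSISDB.catalog.folders AS f
JOIN SSISDB.catalog.projects AS p
    ON p.folder_id = f.folder_id
JOIN SSISDB.catalog.packages AS pk
    ON pk.project_id = p.project_id
WHERE f.name = N'My Folder';

-- Compare the Design default and Server default values of the
-- TableName project parameter (object_type 20 = project-level).
SELECT parameter_name, design_default_value, default_value
FROM SSISDB.catalog.object_parameters
WHERE object_type = 20
  AND parameter_name = N'TableName';
```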

The final thing I want to do is to define a test environment and a production environment that can be used to control the execution context for the project. To do this, I’ll right-click the Environments folder and click Create Environment. Using this approach I’ve created two environments called Test and Production.
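
The same two environments could be scripted instead. This is a sketch assuming the catalog.create_environment procedure as documented for the released product (SQL Server 2012); the descriptions are just illustrative text.

```sql
-- Create the Test and Production environments in My Folder.
EXEC SSISDB.catalog.create_environment
     @folder_name             = N'My Folder',
     @environment_name        = N'Test',
     @environment_description = N'Test execution context';

EXEC SSISDB.catalog.create_environment
     @folder_name             = N'My Folder',
     @environment_name        = N'Production',
     @environment_description = N'Production execution context';
```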

You can edit the properties of each environment to create environment variables, which in turn can be used to set project parameters when project packages are run in the context of the environment. For example, here I’m creating an environment variable named tName in the Test environment with a value of TestDBList. I’ve also created an environment variable with the same name in the Production environment and assigned the value DBList.
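
For reference, here is roughly how the tName variable could be created in both environments with Transact-SQL. It assumes the catalog.create_environment_variable procedure as documented for the released product (SQL Server 2012) and that the Test and Production environments already exist in My Folder; the descriptions are illustrative.

```sql
-- Environment variable values are passed as sql_variant.
DECLARE @test_value sql_variant = N'TestDBList';
DECLARE @prod_value sql_variant = N'DBList';

-- tName in the Test environment points at the test table.
EXEC SSISDB.catalog.create_environment_variable
     @folder_name      = N'My Folder',
     @environment_name = N'Test',
     @variable_name    = N'tName',
     @data_type        = N'String',
     @sensitive        = 0,
     @value            = @test_value,
     @description      = N'Destination table for test runs';

-- tName in the Production environment points at the production table.
EXEC SSISDB.catalog.create_environment_variable
     @folder_name      = N'My Folder',
     @environment_name = N'Production',
     @variable_name    = N'tName',
     @data_type        = N'String',
     @sensitive        = 0,
     @value            = @prod_value,
     @description      = N'Destination table for production runs';
```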

Finally, I can hook the environments up to the project by editing the properties of the project in the Integration Services catalog and adding environment references, as shown here…

… and setting parameters to get their values from environment variables as shown here (note that in the CTP release, you must click OK after adding the environment references on the References page before re-opening the Properties window and changing the parameter value on the Parameters page):
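
A scripted equivalent of these two steps might look like the sketch below. It assumes the catalog.create_environment_reference and catalog.set_object_parameter_value procedures as documented for the released product (SQL Server 2012). The project name My Project is a placeholder, since this post doesn't name the project; substitute your own.

```sql
DECLARE @reference_id bigint;

-- Reference the Test environment from the project.
-- 'R' = relative reference (the environment is in the project's own folder).
EXEC SSISDB.catalog.create_environment_reference
     @folder_name      = N'My Folder',
     @project_name     = N'My Project',   -- placeholder project name
     @environment_name = N'Test',
     @reference_type   = 'R',
     @reference_id     = @reference_id OUTPUT;

-- Reference the Production environment in the same way.
EXEC SSISDB.catalog.create_environment_reference
     @folder_name      = N'My Folder',
     @project_name     = N'My Project',   -- placeholder project name
     @environment_name = N'Production',
     @reference_type   = 'R',
     @reference_id     = @reference_id OUTPUT;

-- Bind the TableName project parameter (object_type 20) to the tName
-- environment variable. With @value_type = 'R', @parameter_value is the
-- name of the environment variable rather than a literal value.
EXEC SSISDB.catalog.set_object_parameter_value
     @object_type     = 20,
     @folder_name     = N'My Folder',
     @project_name    = N'My Project',    -- placeholder project name
     @parameter_name  = N'TableName',
     @parameter_value = N'tName',
     @value_type      = 'R';
```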

So now we have a project deployed to our Integration Services catalog. The project contains two packages - one of which doesn’t actually do anything, and another that copies the list of database names from the sysdatabases system view in the master database to a table in the CmSampleDB database. There is a project-level parameter that is used to indicate which table the database names should be copied to, and this is set to TestDBList or DBList depending on the environment that the package is executed in. To test this, I can right-click My Package.dtsx in Object Explorer and click Run, which produces the following dialog box:

Note that I can select the environment reference I want to use, which will determine the value of the tName environment variable, which will in turn set the value for the TableName project parameter and ultimately determine which table the data is copied to. For this example, I’ll select the Test environment and run the package, and the data is copied to the TestDBList table as shown below:

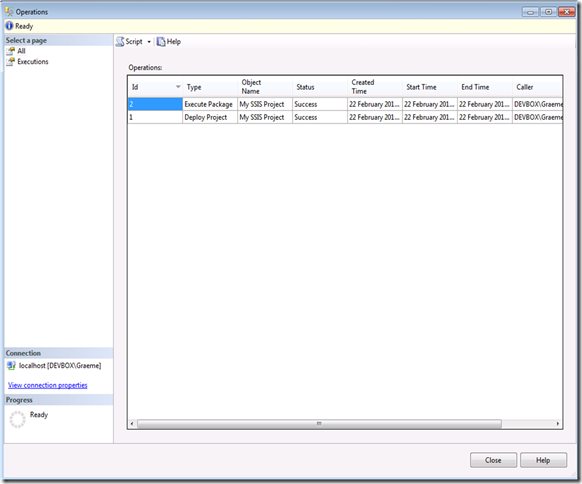
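
The same run can be started from Transact-SQL. The sketch below assumes the catalog.create_execution, catalog.set_execution_parameter_value, and catalog.start_execution procedures as documented for the released product (SQL Server 2012). My Project is again a placeholder project name, and the final query assumes the destination table was created in the dbo schema.

```sql
DECLARE @reference_id bigint, @execution_id bigint;

-- Look up the project's reference to the Test environment.
SELECT @reference_id = er.reference_id
FROM SSISDB.catalog.environment_references AS er
JOIN SSISDB.catalog.projects AS p
    ON p.project_id = er.project_id
JOIN SSISDB.catalog.folders AS f
    ON f.folder_id = p.folder_id
WHERE f.name = N'My Folder'
  AND p.name = N'My Project'              -- placeholder project name
  AND er.environment_name = N'Test';

-- Create an execution of My Package.dtsx in the Test environment.
EXEC SSISDB.catalog.create_execution
     @folder_name     = N'My Folder',
     @project_name    = N'My Project',    -- placeholder project name
     @package_name    = N'My Package.dtsx',
     @reference_id    = @reference_id,
     @use32bitruntime = 0,
     @execution_id    = @execution_id OUTPUT;

-- Ask start_execution to wait until the package finishes
-- (object_type 50 = system parameter).
EXEC SSISDB.catalog.set_execution_parameter_value
     @execution_id    = @execution_id,
     @object_type     = 50,
     @parameter_name  = N'SYNCHRONIZED',
     @parameter_value = 1;

EXEC SSISDB.catalog.start_execution @execution_id = @execution_id;

-- Check the rows copied to the test table (assumes the dbo schema).
SELECT name
FROM CmSampleDB.dbo.TestDBList;
```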

To review past operations in the catalog, you can right-click SSISDB under the Integration Services folder in Object Explorer and click Operations. This shows a list of all operations that have been performed in the catalog in reverse order of occurrence, so in this example you can see that a project was deployed and then a package was executed.
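
The same history is available from a query. This sketch assumes the catalog.operations view and status codes as documented for the released product (SQL Server 2012):

```sql
-- Most recent catalog operations first, with the status code decoded.
SELECT operation_id,
       operation_type,
       object_name,
       CASE status
            WHEN 1 THEN 'Created'
            WHEN 2 THEN 'Running'
            WHEN 3 THEN 'Canceled'
            WHEN 4 THEN 'Failed'
            WHEN 5 THEN 'Pending'
            WHEN 6 THEN 'Ended unexpectedly'
            WHEN 7 THEN 'Succeeded'
            WHEN 8 THEN 'Stopping'
            WHEN 9 THEN 'Completed'
       END AS status_description,
       start_time,
       end_time,
       caller_name
FROM SSISDB.catalog.operations
ORDER BY operation_id DESC;
```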

Double-clicking an entry in the list reveals more information about the operation. For example, here are the details for the package execution:

Note that clicking the Parameters tab shows the parameter values that were used for this particular execution:
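
If you want the parameter values in a result set, something like the following should work. It assumes the catalog.executions and catalog.execution_parameter_values views as documented for the released product (SQL Server 2012), and simply picks the most recent execution of My Package.dtsx.

```sql
-- Parameter values recorded for the latest execution of My Package.dtsx.
SELECT epv.parameter_name,
       epv.parameter_value,
       epv.value_set
FROM SSISDB.catalog.execution_parameter_values AS epv
WHERE epv.execution_id =
      (SELECT MAX(e.execution_id)
       FROM SSISDB.catalog.executions AS e
       WHERE e.package_name = N'My Package.dtsx')
ORDER BY epv.parameter_name;
```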

I’ve used this article to give you a quick tour of the new deployment model for SSIS and how you can use project parameters and environment variables to create a more flexible but manageable ETL solution with SSIS in SQL Server “Denali”. For more information about what’s new in SSIS in “Denali”, see SQL Server Books Online.
