Welcome to the first of a three-part series of posts exploring the new SQL Data Services (SDS) platform from Microsoft.

In this first post, we will explore the Azure Services platform and where SDS fits into this exciting range of new cloud-based services. We will then look at the benefits of utilising SDS within your applications before examining the data model the service adopts and the protocols used to access your data. We will finish by covering the prerequisites and developer SDK to set the scene for subsequent posts. Let's get started!

The end of October saw developers from all over the world gather in Los Angeles for what was Microsoft’s biggest event of 2008 – the Professional Developers Conference (PDC).

It was here that Microsoft unveiled their newest wave of technologies to the developers in attendance and the millions watching worldwide. One of the biggest announcements of the whole event came on the very first day with the official unveiling of Microsoft’s Cloud Computing venture – The Azure Services platform.

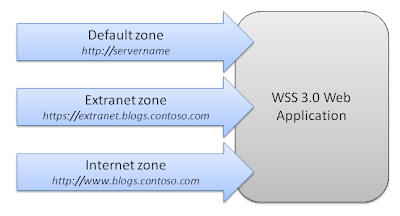

Azure is a brand new hosted platform that provides scalable hosting for your web-based applications. At the heart of the platform is Windows Azure, the cloud-based operating system that serves as the development, service hosting and service management environment for the Azure Services.

The Azure services are subsets of functionality that relate to the larger, on-premise versions of the particular platforms. These subsets are designed to offer the same capabilities but deliver them as services to be consumed by your applications. Currently, the platform offers functionality in the form of Live Services, .NET Services, SQL Services, and SharePoint and Dynamics CRM Services.

The SQL Services platform essentially extends SQL to the cloud, with tweaks that make it scale over thousands of servers and allow you to store, retrieve, and manipulate any amount of data, from a few kilobytes to several terabytes.

The current beta level distribution stands at around 1200 servers geographically spread over 5 data centres!

But why should you use or even care about this exciting new service? Your on-premise database servers have served you well for years, so how will this new model be of any benefit to you as a developer? Here is a breakdown of three key areas that help demonstrate how cool this new platform really is.

Flexibility and Scale

A big push for this platform is the idea of flexibility and scale. When building your applications, infrastructure limits are no longer a problem as the data store will scale dynamically to any size.

The API is accessible through two standards-based interfaces – Simple Object Access Protocol (SOAP) and Representational State Transfer (REST). Using these interfaces makes interaction with the services language and platform independent, so you can access your data from any place, at any time, from any device that supports HTTP.

From a business perspective, the "pay as you grow" service model helps to keep your start-up costs low and ensures that you only pay for the storage you use, which results in a lower total cost of ownership (TCO).

Reliability and Security

One of the big concerns I had about the entire platform (not just SDS) was the security and reliability issues. Trusting Microsoft with large amounts of your corporate data is a big step for any company large or small.

To address this issue, Microsoft have taken the step of building these new services on top of the SQL Server and Windows Server 2008 technology stack to give you the same tried and tested performance you have come to expect from these products. This, coupled with published service level agreements, can help ensure enterprise-class performance and reliability.

Developer Agility

With the SOAP and REST protocols already implemented and other formats and protocols (such as JavaScript Object Notation (JSON) and Atom Publishing Protocol (AtomPub)) on the way, it is very much a free-for-all development experience, allowing you to use whatever tools and platform you feel comfortable with.

Where the platform really shines is in its flexible data model, which doesn’t require any schemas. There is no need to create complex table, column and relationship structures. SDS supports the familiar String, Decimal, Boolean, DateTime, and Binary property data types, and you can also store virtually any type of content as a binary large object (BLOB).
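To make the schema-free idea a little more concrete, here is a minimal Python sketch of an entity as a bag of typed properties. It is purely illustrative: the `make_entity` and `property_type` helpers, and the mapping from the SDS property types to Python types, are my own assumptions and not part of any SDS API.

```python
from datetime import datetime
from decimal import Decimal

# Assumed mapping of the SDS property types named above to Python types.
# Illustrative only; this is not taken from the SDS SDK.
SUPPORTED_TYPES = {
    "String": str,
    "Decimal": Decimal,
    "Boolean": bool,
    "DateTime": datetime,
    "Binary": bytes,
}


def property_type(value):
    """Return the SDS type name for a Python value, or raise TypeError."""
    for name, py_type in SUPPORTED_TYPES.items():
        if type(value) is py_type:
            return name
    raise TypeError(f"unsupported property type: {type(value).__name__}")


def make_entity(entity_id, **properties):
    """Build a hypothetical entity: an id plus a free-form property bag."""
    for value in properties.values():
        property_type(value)  # checks the value type only; no schema involved
    return {"id": entity_id, "properties": dict(properties)}


# Two entities with completely different properties can sit side by side.
apple = make_entity("apple", Name="Apple", Price=Decimal("0.30"), InStock=True)
receipt = make_entity("r-1001", Issued=datetime(2008, 11, 1), Scan=b"\x89PNG")
```

Notice that nothing declares up front which properties an entity must have. The only check is that each value is one of the supported scalar types.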

The ACE Model

SDS offers a simple (and really easy to understand) data model that gives you complete control over the way your data is expressed and related. There is no forced relational schema; instead, the data model is presented as the ACE model – Authorities, Containers and Entities.

The following table describes how the ACE elements relate to one another and how each can be thought of if you are familiar with regular SQL Server.

| Business Logic Layer | Definition | Purpose | SQL Server Analogy |
| --- | --- | --- | --- |
| Authority | Set of containers | Groups containers for accounting, security, and co-location | A SQL Server instance |
| Container | Set of entities | Groups entities for content and queries | An individual database |
| Entity | Scalar property bag | A unit of storage | An individual record |

To put this into a bit of context: if we were building a system for a food supermarket, we could have an authority for each store with a container for each product type (Fruit, Vegetables, Bakery, etc.). Our entities would then be the individual records under each of these containers, with each entity having, for example, a Name, a Price and the current number of items in stock.
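The supermarket example can be sketched as a small in-memory model in Python. This is only a way of picturing the hierarchy, not how SDS is implemented or accessed: the class names mirror the ACE terms, while the ids (`store-001`, `fruit`, `apple` and so on) and the helper methods are hypothetical.

```python
from dataclasses import dataclass, field
from decimal import Decimal


@dataclass
class Entity:
    """A unit of storage: an id plus a bag of scalar properties."""
    id: str
    properties: dict = field(default_factory=dict)


@dataclass
class Container:
    """A set of entities, grouped for content and queries."""
    id: str
    entities: dict = field(default_factory=dict)

    def add(self, entity):
        self.entities[entity.id] = entity
        return entity


@dataclass
class Authority:
    """A set of containers, grouped for accounting, security and co-location."""
    id: str
    containers: dict = field(default_factory=dict)

    def container(self, container_id):
        # Return the existing container, creating it on first use.
        return self.containers.setdefault(container_id, Container(container_id))


# One authority per store, one container per product type (hypothetical ids).
store = Authority("store-001")
fruit = store.container("fruit")
fruit.add(Entity("apple", {"Name": "Apple", "Price": Decimal("0.30"), "InStock": Decimal(120)}))
fruit.add(Entity("pear", {"Name": "Pear", "Price": Decimal("0.45"), "InStock": Decimal(0)}))
store.container("bakery").add(
    Entity("bloomer", {"Name": "Bloomer", "Price": Decimal("1.20"), "InStock": Decimal(15)})
)

# A simple in-memory "query": names of the fruit currently in stock.
in_stock = [e.properties["Name"] for e in fruit.entities.values()
            if e.properties["InStock"] > 0]
```

Queries are scoped to a single container here, which matches the "groups entities for content and queries" purpose given in the table.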

Data Access

One of the major design goals of SDS was to enable communication from any programming environment. To achieve this, SDS currently uses two protocols for communicating with the service – SOAP and REST.

The SOAP protocol is familiar to many developers who consume Web services, is language and platform independent, and is available in any development environment that provides access to a SOAP stack. SOAP is also very well supported by the Microsoft Visual Studio® tools. Developers typically use SOAP when developing for a Microsoft-based environment, especially in enterprise applications where security and interoperability are important.

REST is quite different to SOAP. It is a lightweight, HTTP-based approach that uses URIs to facilitate the exchange of data. This means you can use REST from any environment that has access to an HTTP stack, and this includes the web browser.
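As a rough illustration of addressing data by URI, the sketch below builds a URI for an entity and prepares (but does not send) an HTTP GET request using only the Python standard library. The host name, the path layout, the `Accept` header and the `entity_uri` and `build_get` helpers are all placeholders I have made up for illustration. They are not the real SDS endpoint format, so check the SDK documentation for the actual addresses.

```python
from urllib.parse import quote
from urllib.request import Request

# Placeholder host: NOT the real SDS endpoint, purely for illustration.
HYPOTHETICAL_HOST = "data.example.invalid"


def entity_uri(authority, container=None, entity=None):
    """Build a URI that walks authority -> container -> entity (assumed layout)."""
    if entity is not None and container is None:
        raise ValueError("an entity can only be addressed inside a container")
    base = f"https://{authority}.{HYPOTHETICAL_HOST}/"
    segments = [s for s in (container, entity) if s is not None]
    return base + "/".join(quote(s, safe="") for s in segments)


def build_get(uri):
    """Prepare a plain HTTP GET; nothing is sent over the network here."""
    # The Accept header value is an assumption, not a documented requirement.
    return Request(uri, method="GET", headers={"Accept": "application/xml"})


request = build_get(entity_uri("store-001", "fruit", "apple"))
print(request.get_method(), request.full_url)
```

The point is simply that each authority, container and entity is a resource with its own address, and the standard HTTP verbs act on it. That is why any client with an HTTP stack can take part.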

Prerequisites and SDK

To get started with development, you will need a CTP account with associated credentials to access your online solution. At the time of writing, there are two methods of obtaining an account. If you live in the US, you can visit the SQL Data Services Developer Center and click the “New Customer Sign up” link on the left-hand side, and you will be taken through the process of signing up. Note that while this is free, you will need a credit card for identification and validation purposes. From what I have read elsewhere, this is done by charging $1 to your card.

If, however, you are like me and based outside the US, you can sign up for the CTP of the Azure Services as a whole (which includes SDS) by visiting the Azure Services Platform site. This is also free and requires no credit card.

Once you have your account, you are all set to start your SDS adventures. To help you on your way, there is an SDK that provides a few tools and documentation links that are extremely useful. You can download a copy of the SDK from here.

The main feature of the SDK is the SDSExplorer tool. This tool provides a GUI for interacting with the data stored within your SDS account and executes operations using the REST protocol.

In the next post in the series, we will explore some code that will allow you to execute SDS commands programmatically using REST.